Identify unnecessary jobs, inefficient data pipelines, and suboptimal resource utilization.

See value in 3 easy steps

- Monitor Databricks utilization

- Discover recommendations

- Take action, reduce costs

The Lakehouse Optimizer provides you…

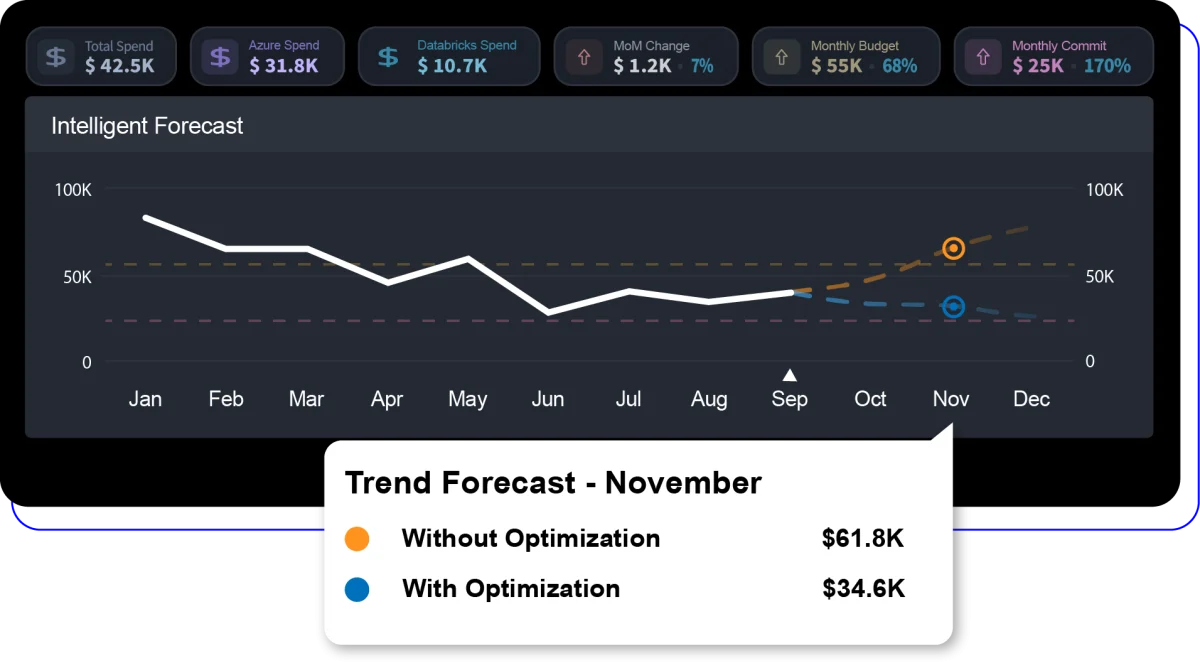

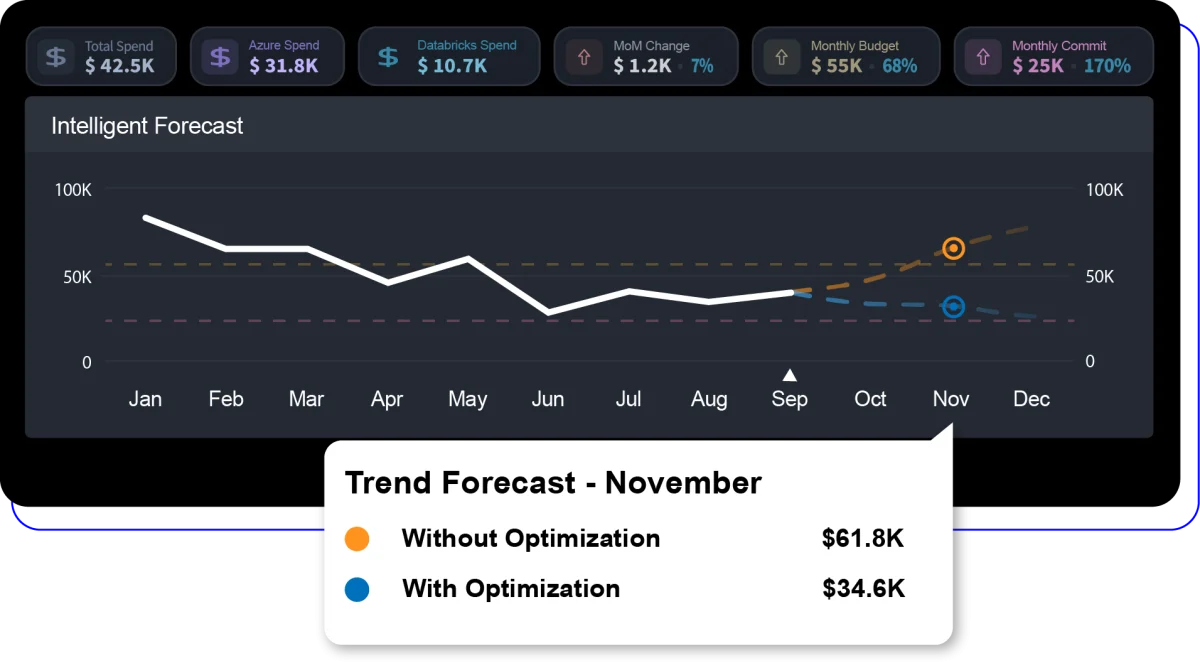

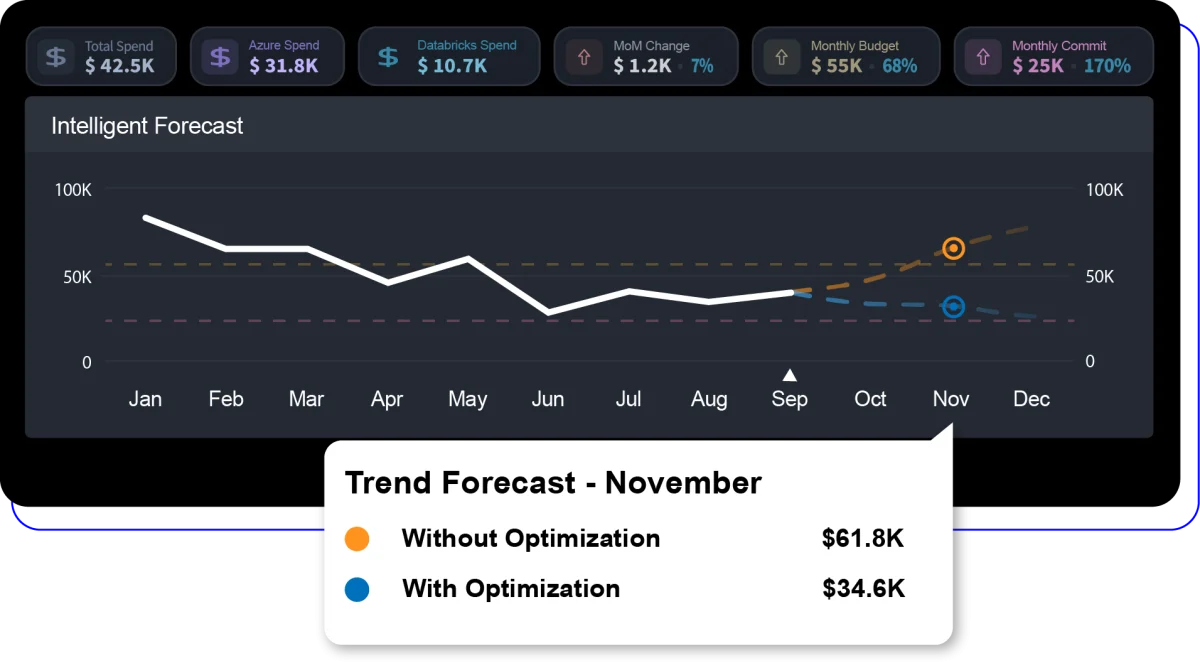

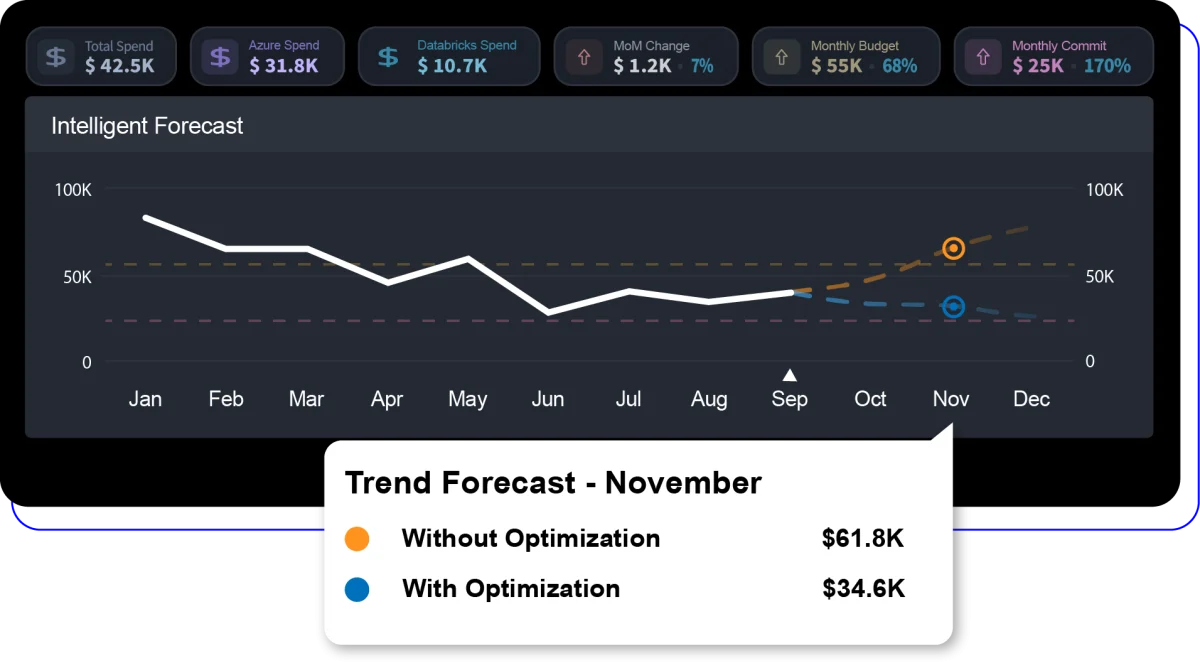

Intelligent forecasting:

Gain financial predictability and stability in your organization’s spend

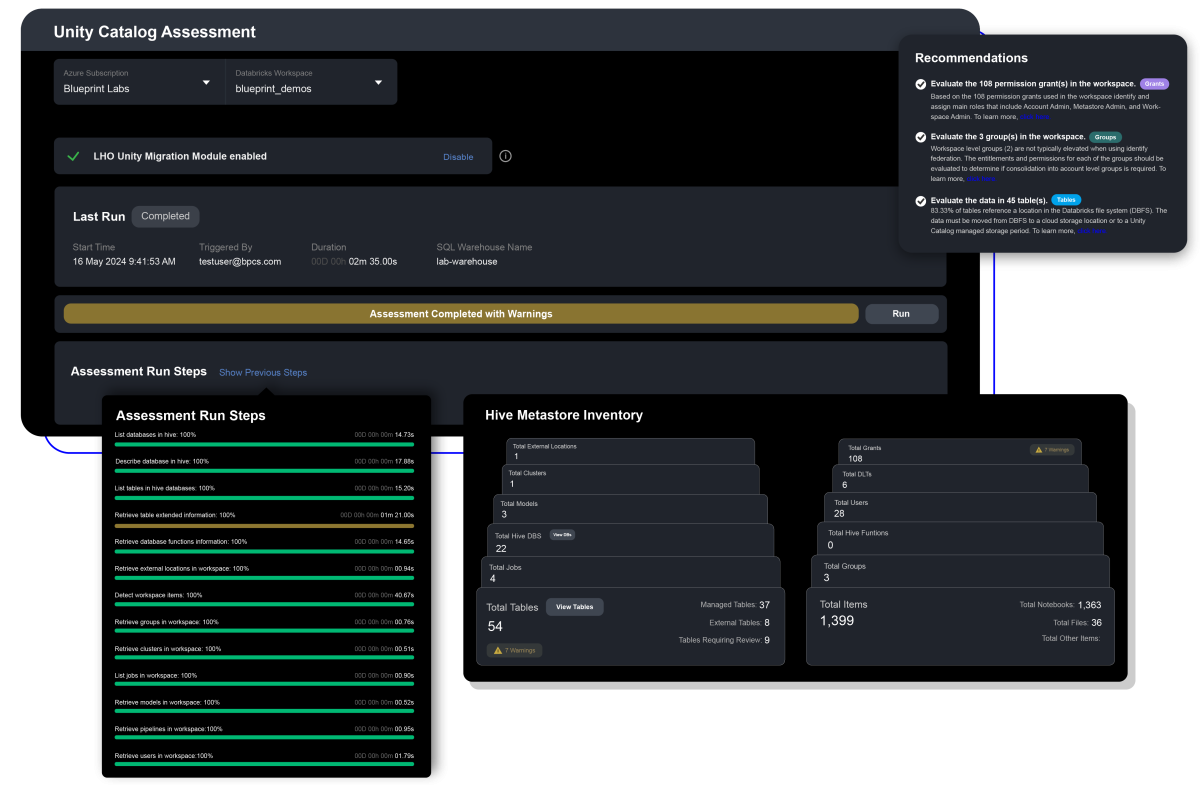

Unity Catalog migration assessment

Evaluate the current state of your Databricks environment to determine its readiness for migration to Unity Catalog and identify the necessary work for the transition.

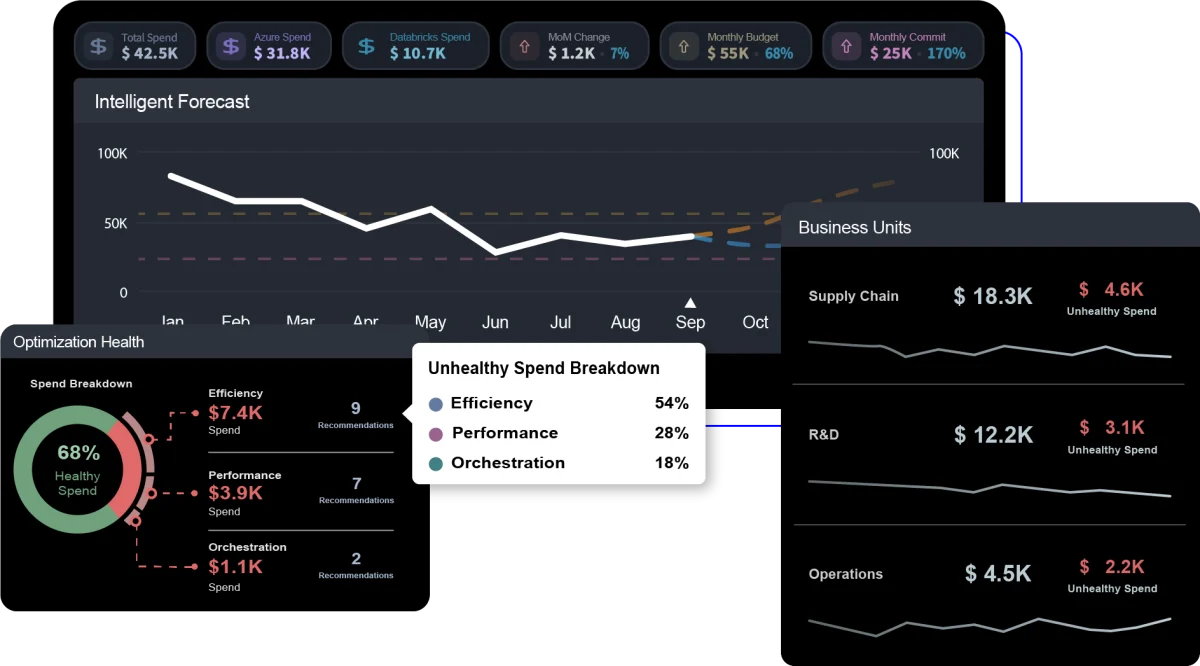

Executive insights engine:

Allocate cost and measure performance by department, project, and initiative

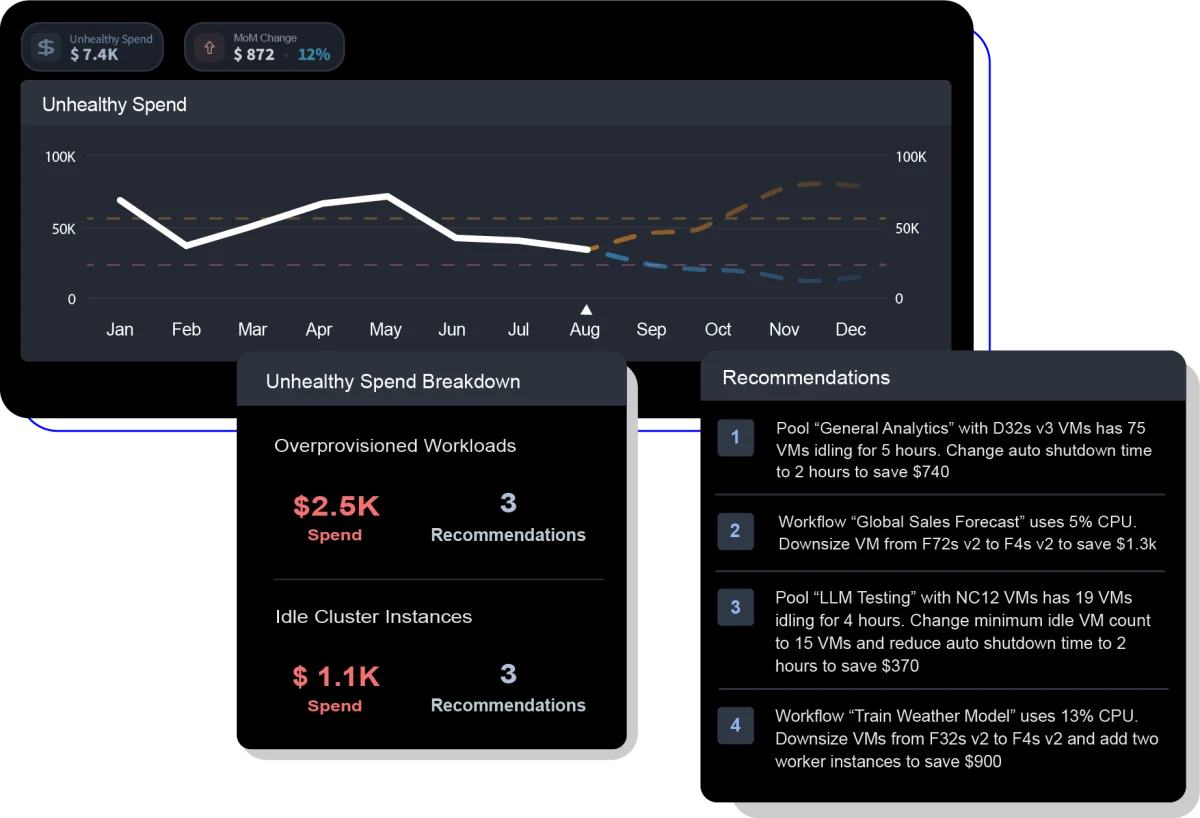

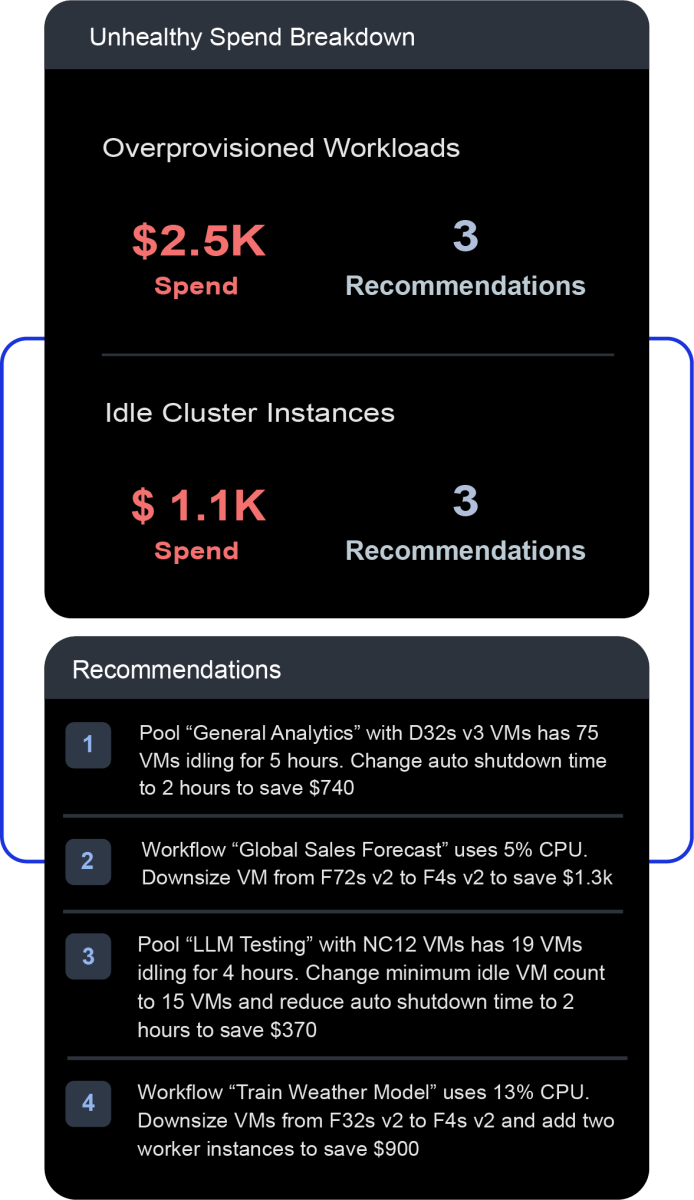

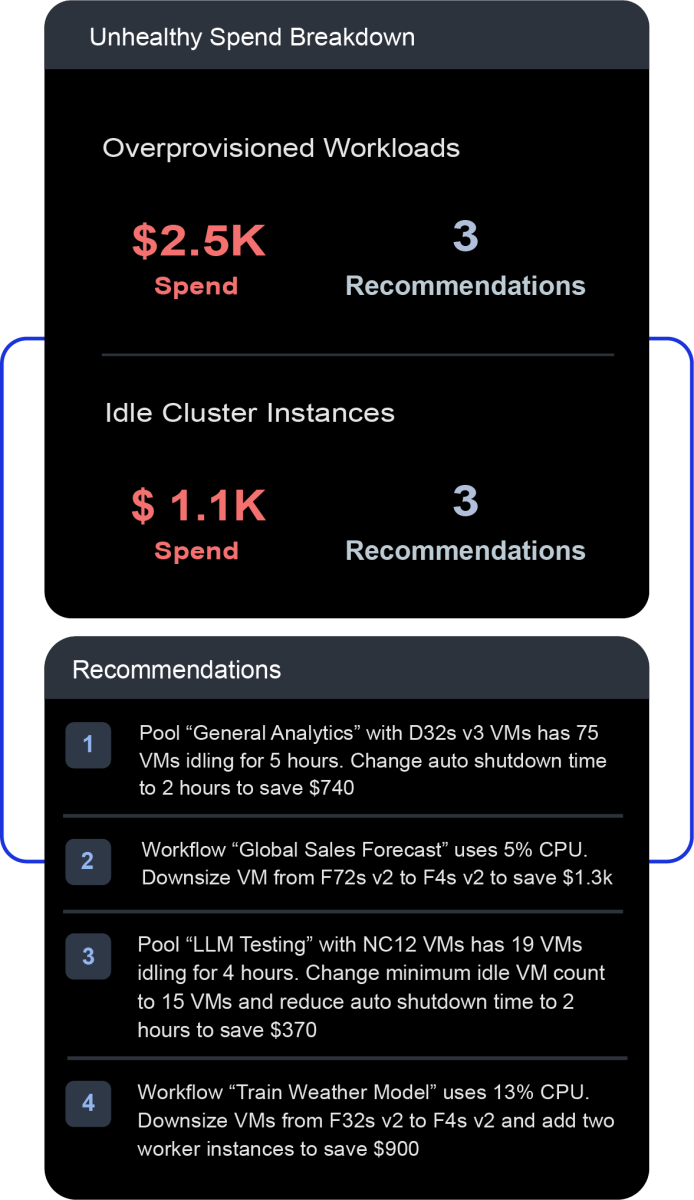

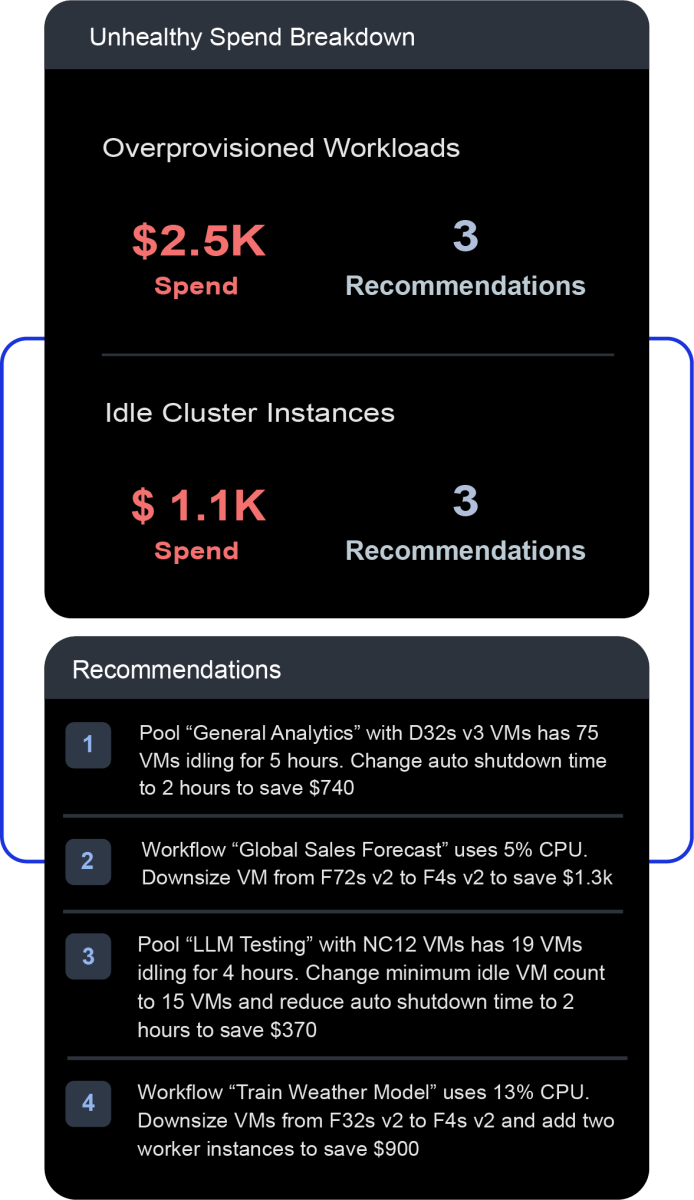

Intelligent recommendations:

Receive specific, actionable recommendations tailored to your organization’s environment

Pool contention reporting:

Understand how to minimize latency for new workloads and maximize throughput

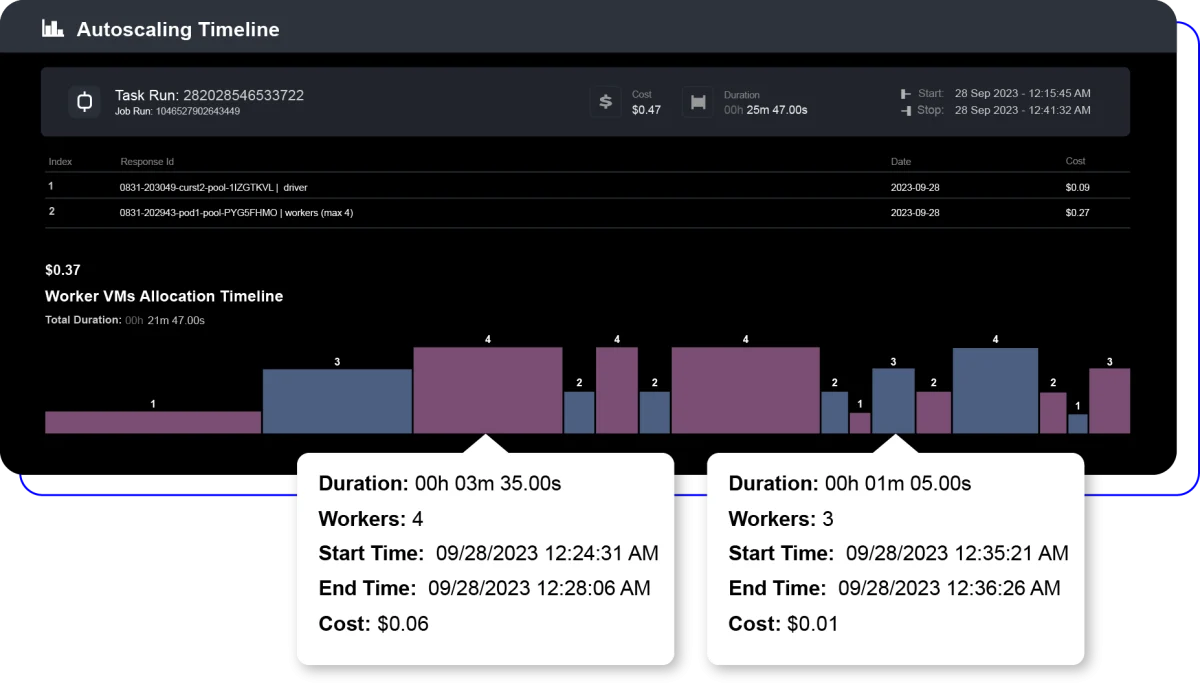

Autoscaling insights:

Identify inefficiencies and optimal autoscaling policy configurations

Common challenges → Big wins

LHO's practical impact on your business

- Monthly bill management

- Monitor budget

- Cost management

- Performance tuning

Engineering leader receives an unexpectedly high bill

Scenario

LHO solution

Monitoring budget against forecasted spend

Scenario

LHO solution

Cost management and optimization

Scenario

LHO solution

Performance tuning of data pipelines

Scenario

LHO solution

Michael Hallak

Director of Product Sales

Right-size compute resources and optimize storage costs to eliminate waste in your environment with the Lakehouse Optimizer.

Achieving unified governance for data and AI

Unity Catalog migration powered by LHO

Is migrating to Unity Catalog on your to-do list? This webinar will leave you with a comprehensive understanding of how to efficiently migrate and optimize your lakehouse for good.

Frequently asked questions

The Lakehouse Optimizer is available as a 30-day free trial in the Azure Marketplace, which you can deploy on your own.

You can follow this guide for assistance. If you would rather have a guided experience, Blueprint can help you through your AWS or Azure deployment to get you up and running.

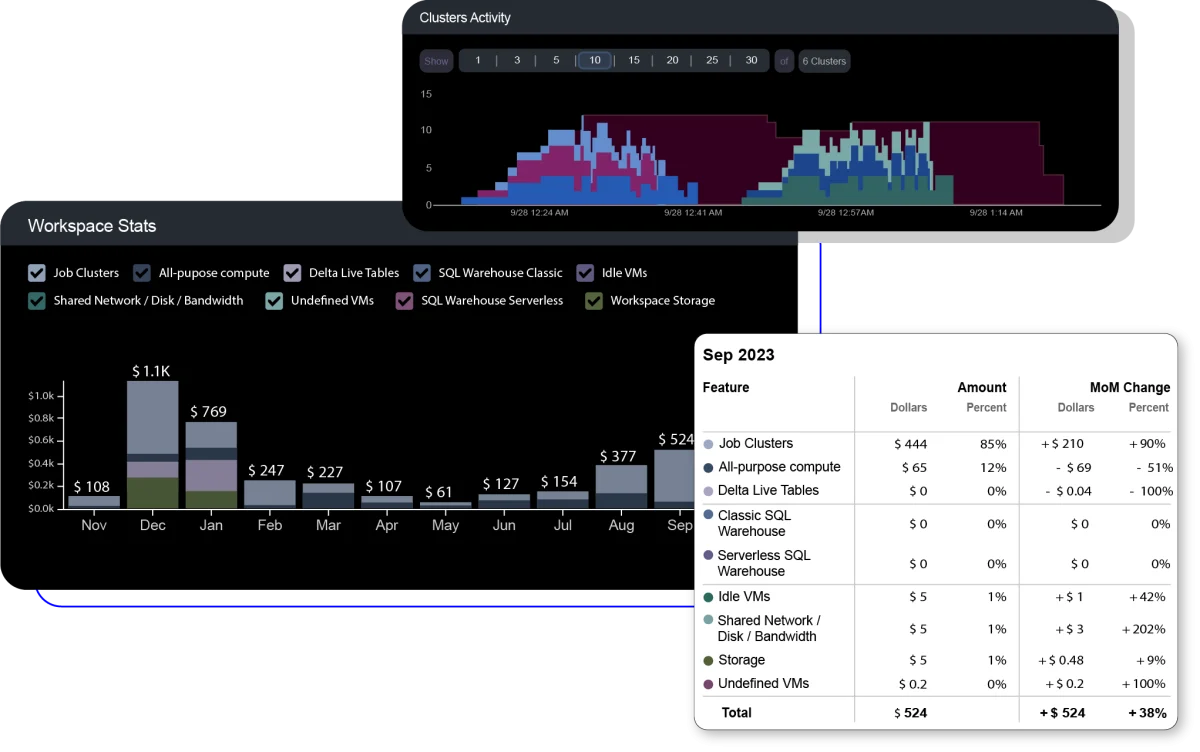

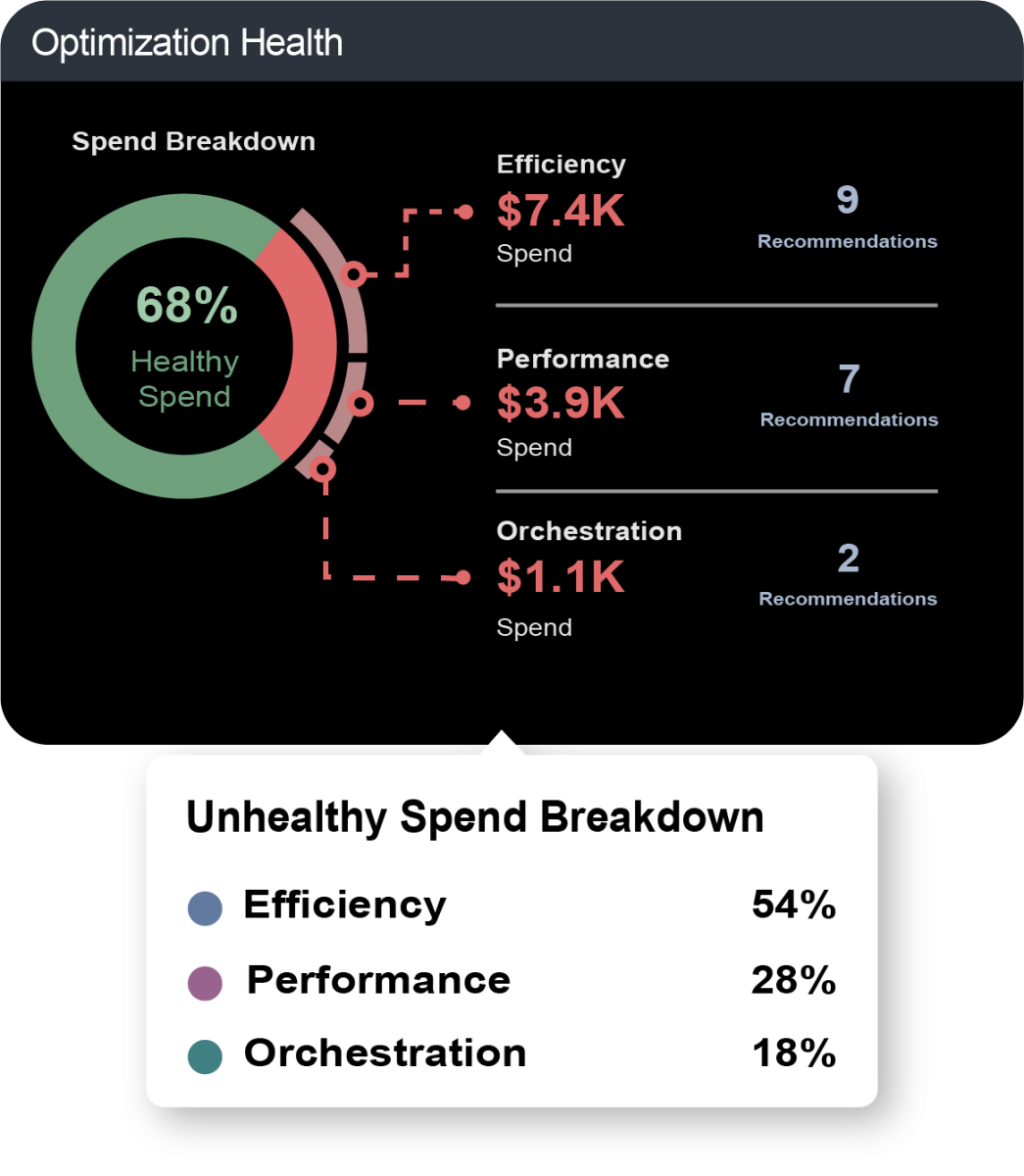

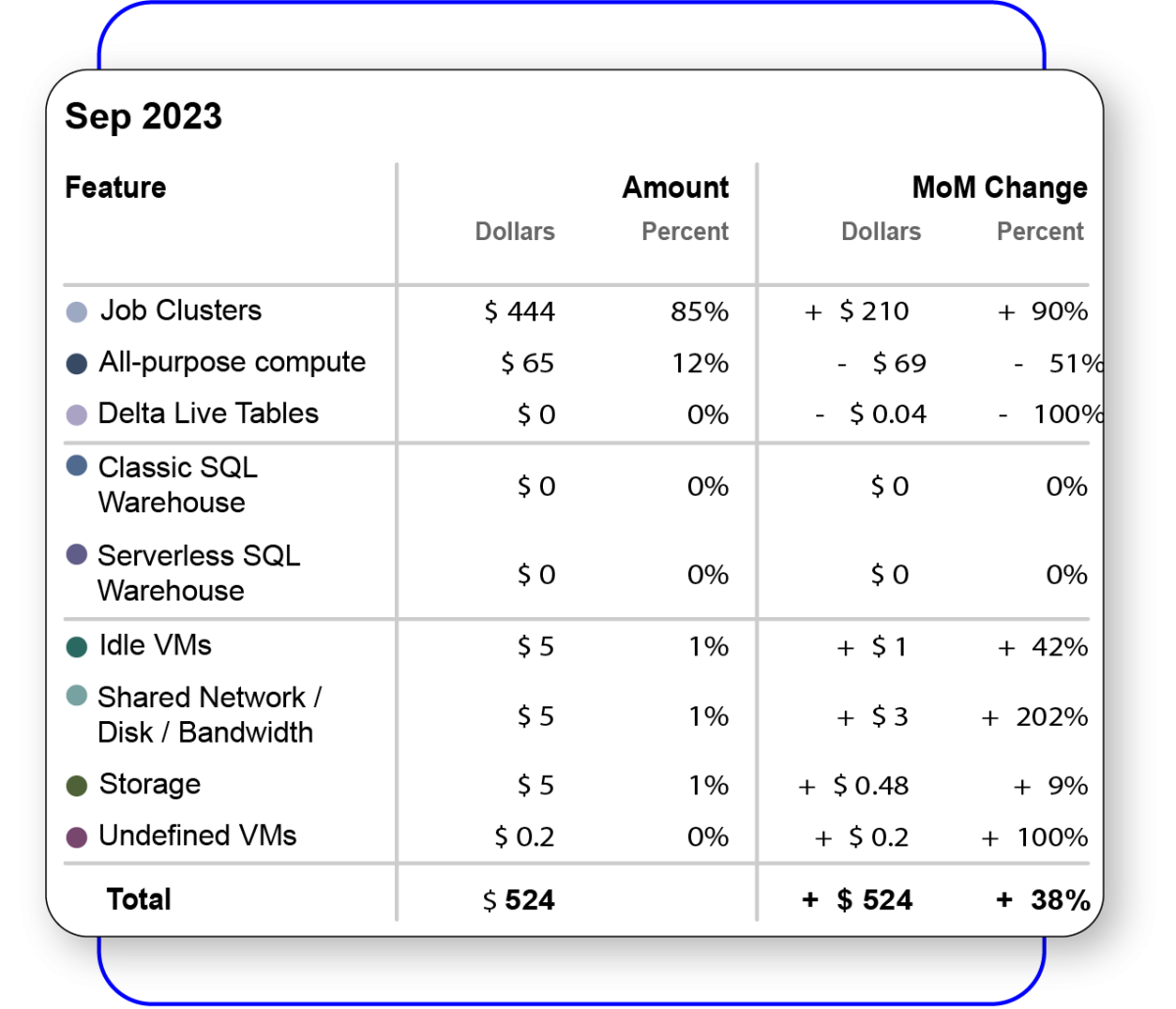

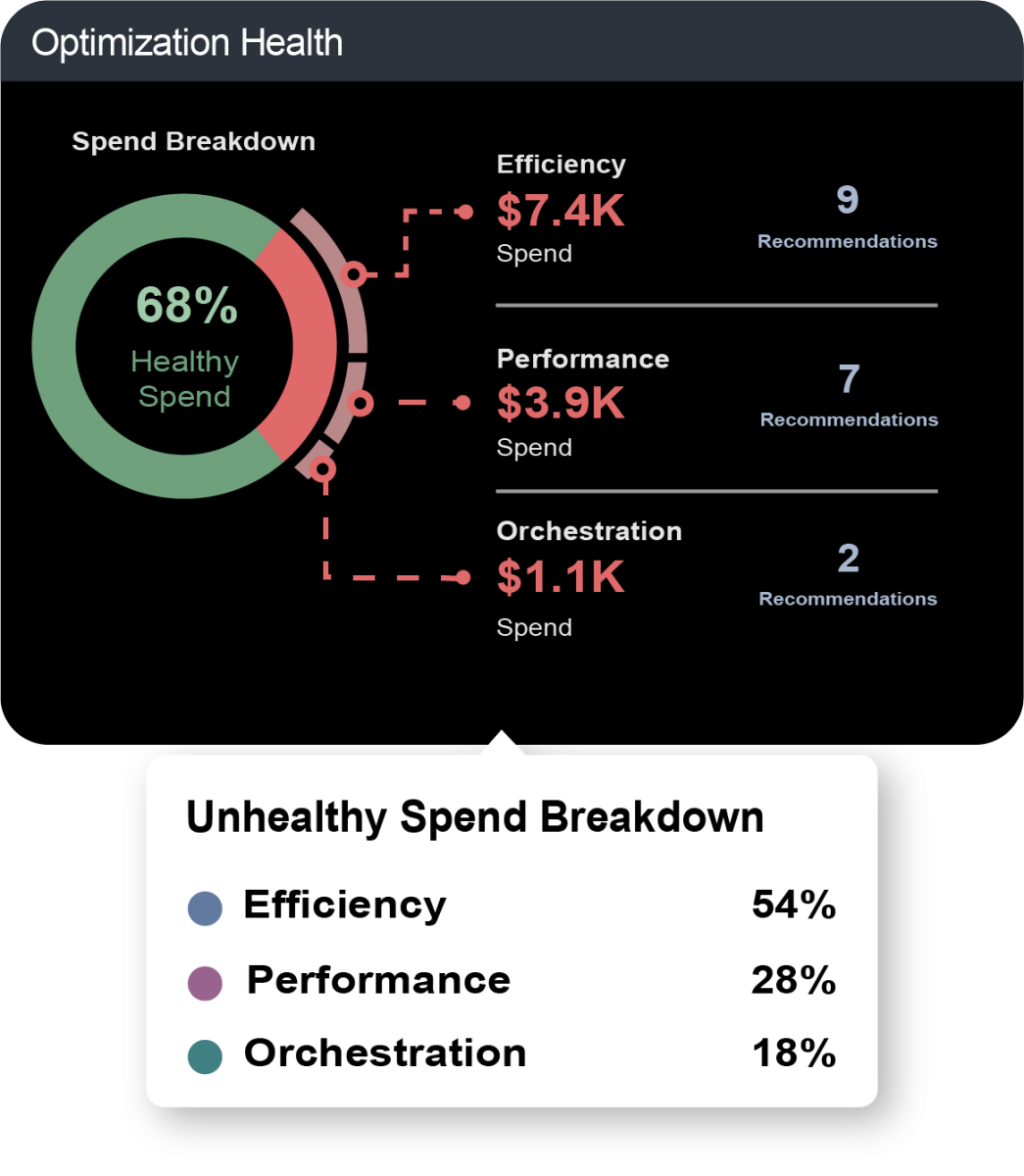

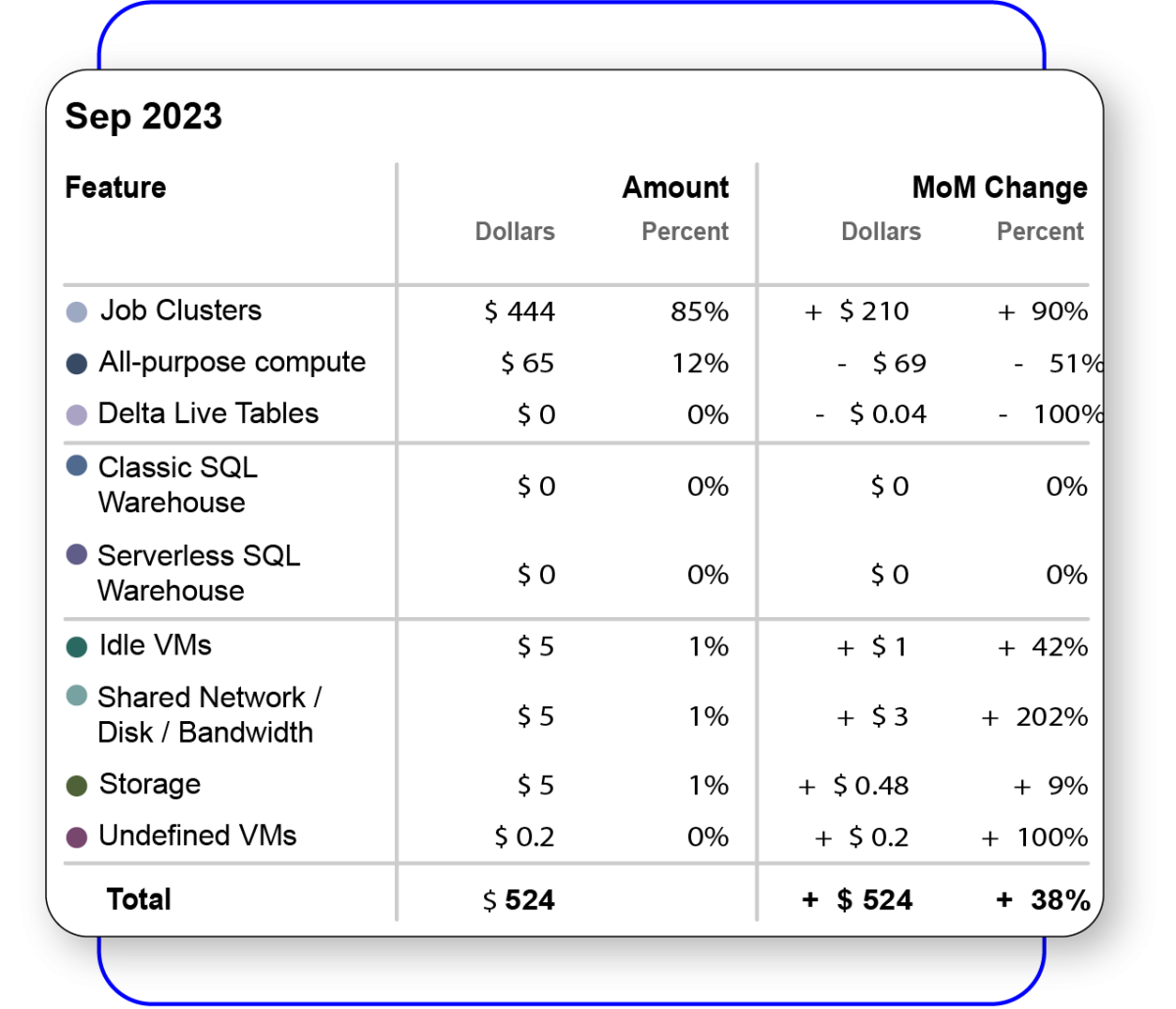

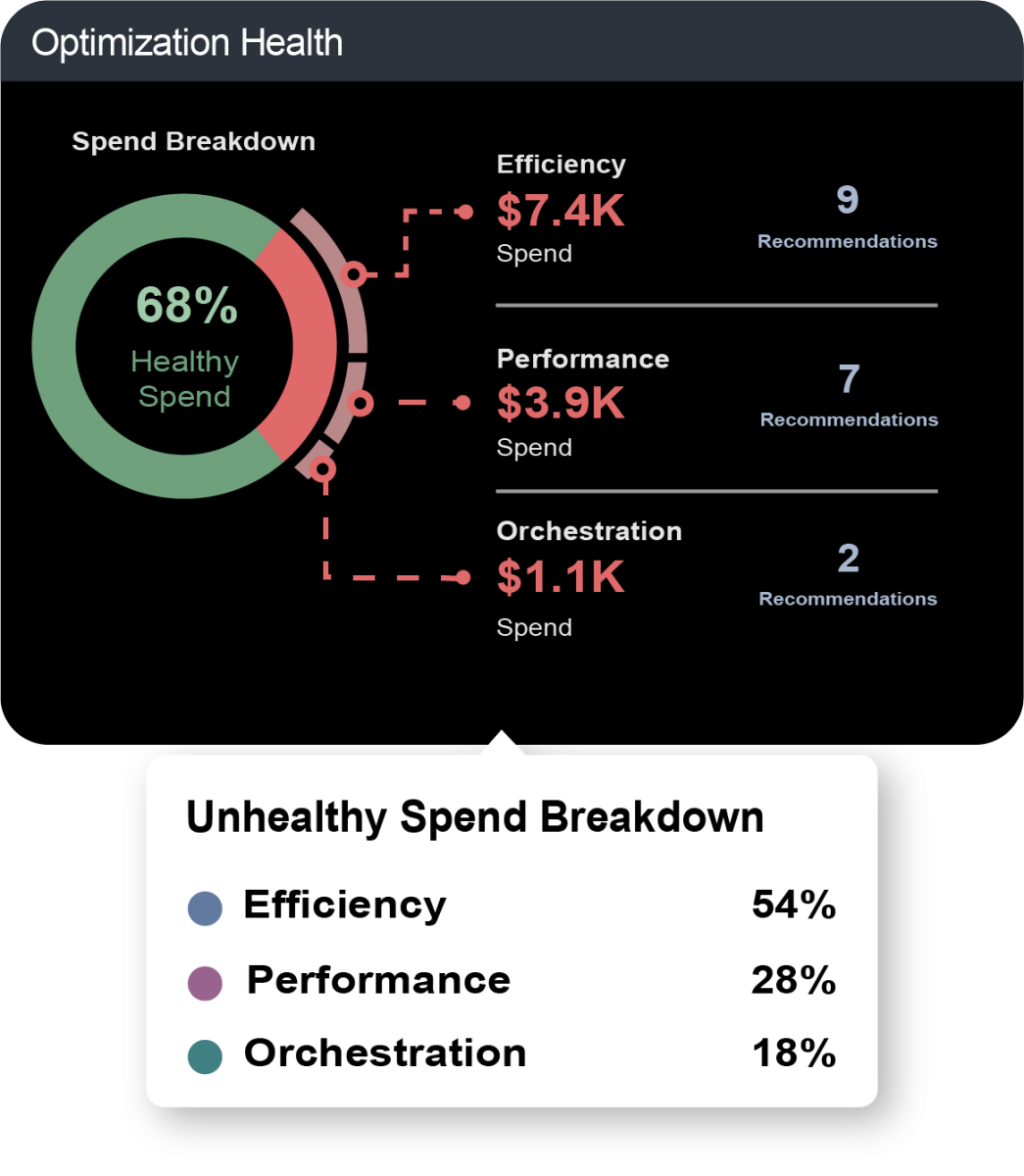

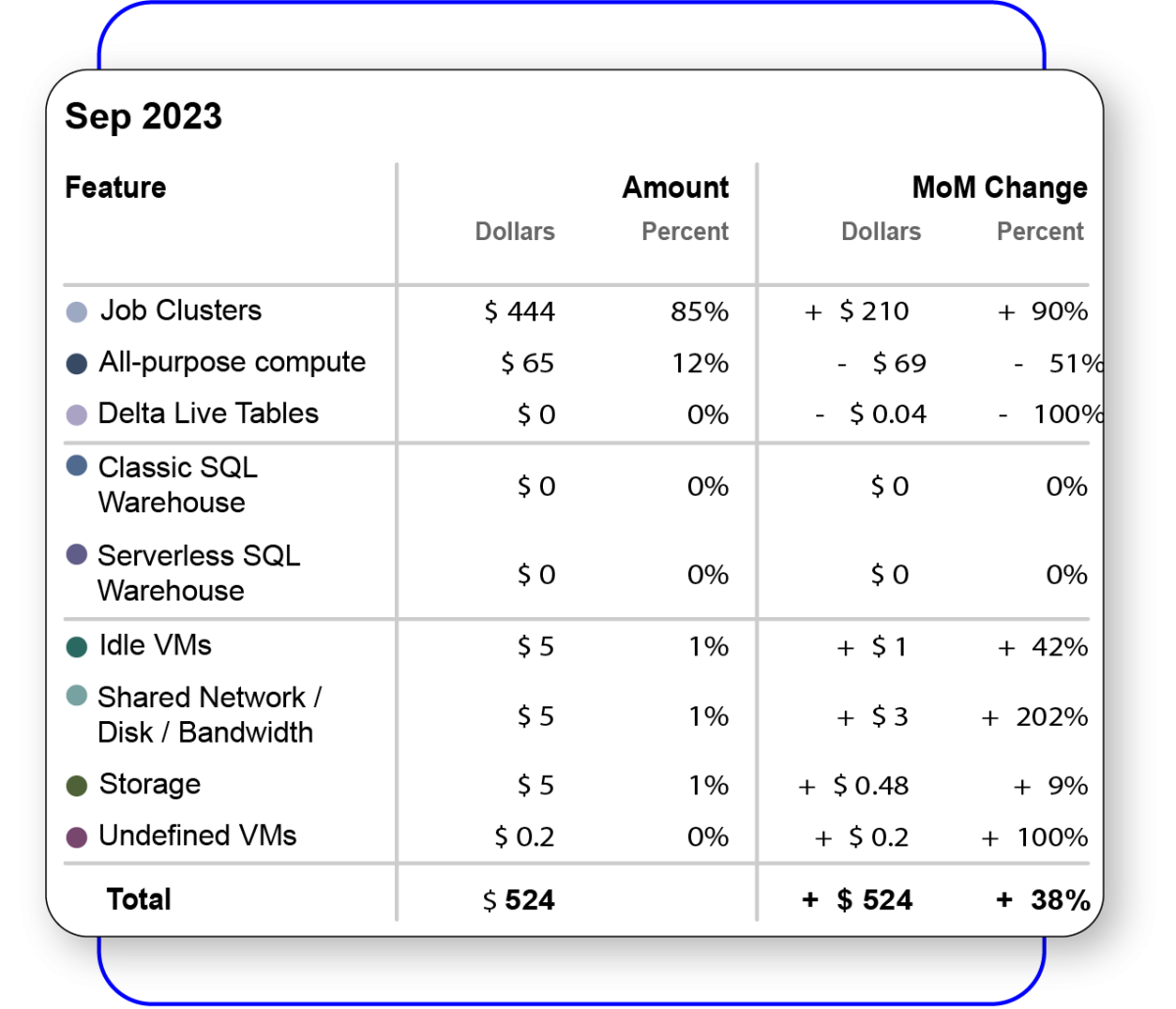

With LHO, you can not only see your spend but also understand whether it is healthy or unhealthy, with transparency across the organization into attributed spend. You can even drill all the way down to individual autoscaling events or the code-block execution of an individual job run in a workflow, and see both Azure and Databricks costs represented.

The Lakehouse Optimizer is most commonly used by managers to receive alerts about critical issues, such as nearing budget limits, unexpected cost spikes, or unusual spending patterns. These alerts help managers quickly identify and address potential problems.

For developers, the Lakehouse Optimizer is often used while writing data pipelines. As they write code, LHO guides them in properly configuring clusters and selecting the right resources. This ensures that the code is optimized for the resources needed and that the appropriate tools are used for each task.
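To make the manager alerts described above concrete, here is a minimal Python sketch of two such rules: nearing a budget limit and an unexpected cost spike. It is an illustration only and not how LHO itself works. The function name `check_spend`, the `Alert` type, and the default thresholds (80% of budget, 2x the prior daily average) are all assumptions made for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Alert:
    kind: str      # "budget" or "spike" (hypothetical labels)
    message: str


def check_spend(daily_costs, monthly_budget, near_limit=0.8, spike_factor=2.0):
    """Return alerts for a month-to-date list of daily costs.

    The rules and thresholds here are illustrative assumptions,
    not LHO's actual alerting logic.
    """
    alerts = []
    total = sum(daily_costs)

    # Rule 1: month-to-date spend has reached a set fraction of the budget.
    if total >= near_limit * monthly_budget:
        alerts.append(Alert("budget", f"{total / monthly_budget:.0%} of budget used"))

    # Rule 2: the latest day costs far more than the average of earlier days.
    if len(daily_costs) >= 2:
        baseline = mean(daily_costs[:-1])
        if baseline > 0 and daily_costs[-1] > spike_factor * baseline:
            ratio = daily_costs[-1] / baseline
            alerts.append(Alert("spike", f"latest day is {ratio:.1f}x the prior average"))

    return alerts


if __name__ == "__main__":
    for alert in check_spend([100, 100, 100, 600], monthly_budget=1000):
        print(alert.kind, "-", alert.message)
```

In practice the thresholds would be tuned per team or workspace, and the daily costs would come from your own billing or usage data.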

Absolutely.

The Lakehouse Optimizer offers features that help you identify relevant data and optimize your jobs or workflows. It provides detailed recommendations, total cost analysis, and other insights that guide you in making the necessary adjustments for improved performance and efficiency.

If you’re focused on specific jobs or clusters, start by reviewing the ones you’re responsible for and see what insights the Lakehouse Optimizer offers. Check if there are any recommendations for optimizing performance or reducing costs.

For those overseeing broader operations, it’s helpful to begin with a high-level view of your tenant or workspace. This will give you a clear picture of where spending is concentrated within Databricks and your cloud provider, providing a strong starting point for further analysis and optimization.

The Unity Catalog Migration Assessment evaluates your current Databricks environment to determine its readiness for migration to Unity Catalog. It also identifies the specific steps required for a successful transition. With Blueprint’s proven expertise, we have achieved a 100% success rate in Unity Catalog migrations.