The problem

Businesses choose Databricks as their data platform because the Lakehouse provides a single place where data can easily be processed, explored and transformed. This company recognized the potential value and migrated and configured their environment correctly, but once it was running, they didn’t see the immediate return they expected. They were racking up hundreds of thousands of dollars in Databricks costs and wondering, “Where is this money going?”

“Priority number one for businesses is always to get what they need to run the business right now. They had set up their clusters correctly and done their jobs well, they just didn’t think of continual monitoring and optimization,” says Cori Hendon, Blueprint’s Senior Data Scientist. “Our job was to remind them of that.”

The Blueprint Way

After taking the time to understand the client’s environment, immediate needs and business goals, Blueprint was able to quickly home in on several optimization opportunities, centered on the client’s cluster configuration and the overall lack of transparency into the cost and return on their Databricks usage.

In the Databricks Lakehouse, jobs are assigned to clusters, and if those clusters are turned on, the company is charged by the second, even if the machine is sitting idle. The clusters can be turned off and on depending on when these jobs need to be run, but many companies do not realize this and leave them on constantly. “It’s a case of leaving a cluster on all the time in case a request comes in versus turning it on only when there is a request. There’s very little difference in performance, but there’s a huge difference in cost. They saved an immediate $45,000 by shutting down just one persistent cluster,” says Hendon.
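To make the always-on versus on-demand comparison concrete, here is a minimal sketch of the arithmetic. It assumes a flat hourly rate billed by the second; the function names, the rate and the busy hours are hypothetical illustrations, not the client's figures or actual Databricks pricing.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR


def cluster_cost(seconds_on: float, hourly_rate: float) -> float:
    """Cost of a cluster that is on for `seconds_on`, billed per second."""
    return seconds_on / SECONDS_PER_HOUR * hourly_rate


def always_on_vs_on_demand(busy_hours_per_day: float,
                           hourly_rate: float,
                           days: int = 30) -> tuple[float, float, float]:
    """Compare leaving a cluster on all day with running it only when busy.

    Returns (always_on_cost, on_demand_cost, savings) for the period.
    """
    always_on = cluster_cost(SECONDS_PER_DAY * days, hourly_rate)
    on_demand = cluster_cost(
        busy_hours_per_day * SECONDS_PER_HOUR * days, hourly_rate
    )
    return always_on, on_demand, always_on - on_demand


if __name__ == "__main__":
    # Hypothetical inputs: 2 busy hours a day at an assumed $10/hour.
    always_on, on_demand, savings = always_on_vs_on_demand(2, 10.0, days=30)
    print(f"always on: ${always_on:,.0f}")
    print(f"on demand: ${on_demand:,.0f}")
    print(f"savings:   ${savings:,.0f}")
```

The sketch ignores startup time and any minimum billing increments, so it is best read as an upper bound on the savings from shutting down an idle cluster.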

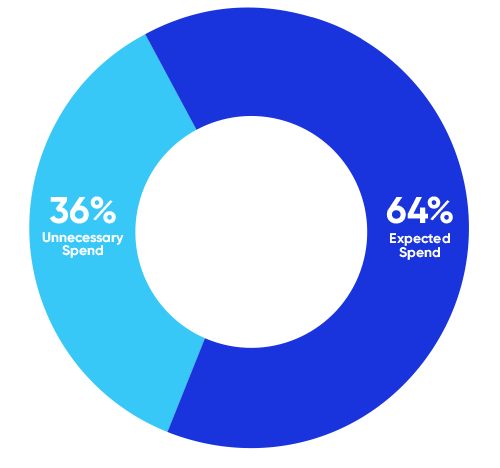

Another key area of optimization is right-sizing the clusters themselves. Clusters come in a variety of sizes, and their use depends on the complexity of the data jobs that need to be run on them, with larger clusters bringing higher usage costs. Our team of data scientists combed through the existing Databricks clusters to uncover how much pressure the clusters were under when running. For many jobs, the clusters being utilized were far too big, and thus far too expensive, for their immediate needs. By identifying these mismatches and moving jobs to appropriately sized clusters, Blueprint was able to deliver immediate savings of 80%.
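One simple way to spot such mismatches is to compare each cluster's peak resource pressure against a threshold. The sketch below is illustrative only: the `ClusterUsage` record, the 50% threshold and the sample clusters are assumptions, and in practice the utilization figures would come from whatever monitoring data is available for the environment.

```python
from dataclasses import dataclass


@dataclass
class ClusterUsage:
    """Hypothetical summary of how hard a cluster works while running."""
    name: str
    workers: int
    peak_cpu_pct: float     # highest CPU utilization observed, 0-100
    peak_memory_pct: float  # highest memory utilization observed, 0-100


def oversized_clusters(clusters: list[ClusterUsage],
                       threshold_pct: float = 50.0) -> list[str]:
    """Names of clusters whose peak CPU and memory both stay under the threshold.

    A cluster that never exceeds the threshold on either resource is a
    candidate for a smaller (cheaper) size.
    """
    return [
        c.name
        for c in clusters
        if max(c.peak_cpu_pct, c.peak_memory_pct) < threshold_pct
    ]


if __name__ == "__main__":
    # Made-up sample data for illustration.
    sample = [
        ClusterUsage("nightly-etl", workers=16, peak_cpu_pct=22.0, peak_memory_pct=31.0),
        ClusterUsage("ml-training", workers=8, peak_cpu_pct=91.0, peak_memory_pct=64.0),
        ClusterUsage("ad-hoc-sql", workers=4, peak_cpu_pct=35.0, peak_memory_pct=78.0),
    ]
    print(oversized_clusters(sample))
```

Using the peak rather than the average is a deliberately cautious choice: a cluster is flagged only if it never came under real pressure on either resource.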

Our data scientists also created dashboards and reporting systems designed to provide insight into ongoing Databricks usage. Armed with this new-found visibility, this company can now discover areas of optimization as they arise, as well as spot expensive mistakes and inefficiencies before they balloon costs. For example, clusters are often spun up to perform a heavy data experiment, but if they are left on once the experiment is complete, that waste inflates spend for no benefit and drags down the ROI of the entire Databricks investment.

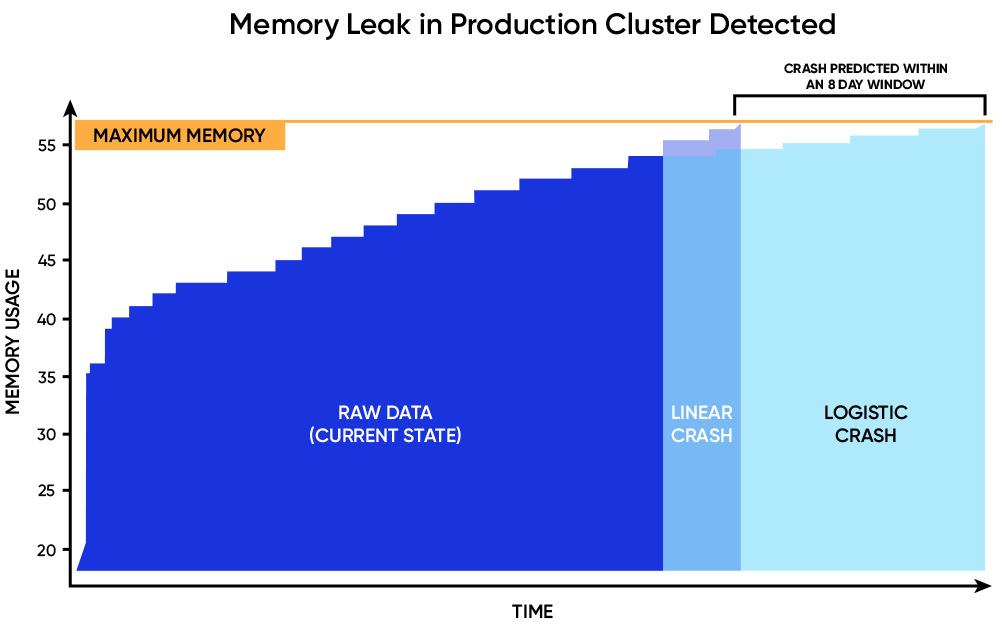

(n+1)

While our data scientists investigated the client’s environment, they discovered a previously unknown but critical issue: a memory leak was rapidly exhausting memory in the production environment and would have caused a complete shutdown of that environment in just eight days, leaving the company unable to service its customers. This was not what Blueprint was brought in to solve, but it surfaced through our diligent investigation processes. The warning was passed along to the client, along with a solution to fix the issue now and prevent it going forward.
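A time-to-exhaustion estimate of this kind can be produced by fitting a straight line to memory readings and extrapolating to capacity. The sketch below shows that general technique under stated assumptions; it is not a description of the method Blueprint used, and the function name, readings and capacity are hypothetical.

```python
from typing import Optional, Sequence


def days_until_exhausted(days: Sequence[float],
                         used_gb: Sequence[float],
                         capacity_gb: float) -> Optional[float]:
    """Estimate days remaining until memory use reaches capacity.

    Fits a least-squares line through (day, used_gb) readings and
    extrapolates it to `capacity_gb`. Returns the number of days after
    the last reading, or None if usage is not growing.
    """
    if len(days) != len(used_gb) or len(days) < 2:
        raise ValueError("need at least two paired readings")

    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(used_gb) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("readings must span more than one point in time")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, used_gb))

    slope = sxy / sxx  # growth in GB per day
    if slope <= 0:
        return None    # flat or shrinking usage: no exhaustion predicted
    intercept = mean_y - slope * mean_x

    day_at_capacity = (capacity_gb - intercept) / slope
    return day_at_capacity - days[-1]


if __name__ == "__main__":
    # Made-up readings: usage grows 10 GB a day toward a 120 GB limit.
    remaining = days_until_exhausted([0, 1, 2, 3], [10, 20, 30, 40], 120)
    print(f"estimated days remaining: {remaining:.1f}")
```

A linear fit is the simplest reasonable model for a steady leak; real usage can grow in bursts, so an estimate like this works best as an early warning rather than a precise deadline.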

With the urgent optimizations made and the potentially disastrous memory leak averted, this company is on solid ground with their Databricks environment. In addition to immediate usage savings in the hundreds of thousands of dollars, they are now equipped with the visibility and training to monitor, forecast and control Databricks usage, and thus spend, on their own going forward.