The problem

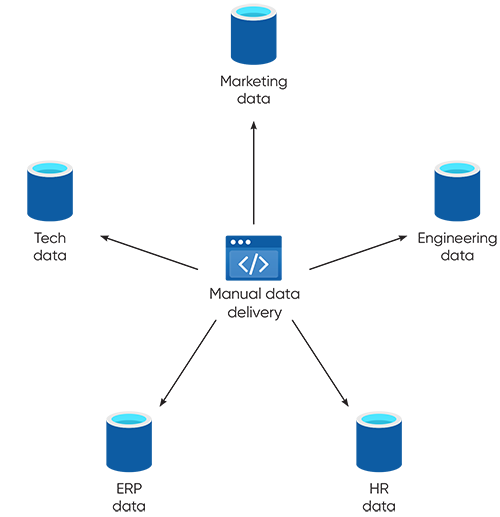

Over its 100-year history, which included numerous mergers and acquisitions, an oil drilling company had accumulated more than 65 TB of historical rig data, including sensor data and oil sample data, in addition to data from its financial, HR and marketing divisions. The company's strategic vision for the future focused on technology innovation and automation. However, using analytics and data science to draw insights from all that data was difficult because the data was siloed across the company's various enterprise systems.

The IT department was stuck in the middle of the process, delivering data manually, an arrangement at odds with the company's vision.

Realizing that it needed rapid, sophisticated data insights to drive the business, the company partnered with Blueprint Technologies to aggregate data from multiple enterprise systems, including third-party systems, into a modern data estate that would improve decision-making and planning and automate the data delivery process.

“The vision for this data platform is to have every data asset the company owns living in one place,” a Blueprint developer said. “We want it to be easily digestible and consumable for any employee ― this is all about enabling a self-service reporting model.”

The Blueprint Way

As part of a proof of concept, Blueprint set out to show that a well-designed, comprehensive modern data estate could be built within the company's technology ecosystem. The proof of concept focused on rig sensor data: it used Databricks to engineer raw files into usable data and an Azure Data Lake Storage Gen2 instance to store and maintain the company's data securely, in a form that could easily be queried from Power BI and Databricks. It also sought to confirm that the solution could scale easily as the company acquired and created more data.

The six-week proof of concept (POC) demonstrated that the company's highly complex sensor data could be securely ingested into an Azure Data Lake Storage Gen2 instance. This successful POC led directly to the first phase of a multi-phase engagement to stand up a full modern data estate, retire expensive legacy systems and build automated data delivery pipelines into BI platforms.

In Phase 1, functionality to stream rig sensor data into the modern data estate was delivered to business users in a production environment. Using Databricks, the data was processed, normalized and organized into Delta Lake tables, which removed the need for third-party legacy data engineering tools to structure the raw data. More than 85 TB of historical and streaming data have already been delivered into the modern data estate.
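The normalization step above is described only at a high level. As an illustration of what "normalizing" raw sensor records into table-ready rows can involve, here is a minimal sketch in plain Python. It assumes a hypothetical comma-separated `rig, timestamp, channel, value, unit` record format and hypothetical unit conversions (`TO_CANONICAL`, `normalize` and `to_table` are illustrative names, not part of the company's platform). The real pipeline ran in Databricks and wrote to Delta Lake tables; this sketch uses only the standard library and does not touch the Databricks or Delta Lake APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List, Optional

# Hypothetical conversion table: raw unit -> (canonical unit, multiplier).
# A real platform would take channels and units from its own data catalog.
TO_CANONICAL = {
    "ft": ("m", 0.3048),
    "m": ("m", 1.0),
    "psi": ("kPa", 6.894757),
    "kpa": ("kPa", 1.0),
}


@dataclass(frozen=True)
class SensorReading:
    rig_id: str
    ts_utc: datetime
    channel: str
    value: float
    unit: str


def normalize(line: str) -> Optional[SensorReading]:
    """Parse one raw 'rig,timestamp,channel,value,unit' line; return None if malformed."""
    parts = [p.strip() for p in line.split(",")]
    if len(parts) != 5:
        return None
    rig, ts, channel, value, unit = parts
    conversion = TO_CANONICAL.get(unit.lower())
    if conversion is None:
        return None  # unknown unit: reject rather than guess
    try:
        parsed = datetime.fromisoformat(ts.replace("Z", "+00:00"))
        number = float(value)
    except ValueError:
        return None
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive timestamps are UTC
    target_unit, factor = conversion
    return SensorReading(
        rig_id=rig.upper(),
        ts_utc=parsed.astimezone(timezone.utc),
        channel=channel.lower(),
        value=round(number * factor, 4),
        unit=target_unit,
    )


def to_table(lines: Iterable[str]) -> List[SensorReading]:
    """Normalize raw lines, drop malformed records, and sort rows for a tabular write."""
    rows = [r for r in (normalize(line) for line in lines) if r is not None]
    return sorted(rows, key=lambda r: (r.rig_id, r.ts_utc, r.channel))


raw = [
    "rig-07, 2021-03-04T10:15:30Z, Depth, 1000, ft",
    "RIG-07,2021-03-04T10:15:00Z,standpipe_pressure,2500,psi",
    "RIG-07,not-a-time,depth,1001,ft",  # malformed timestamp: dropped
]
table = to_table(raw)
```

In a Spark job, the same per-record rules would typically be expressed as DataFrame transformations before writing to a Delta table. The standard-library version only keeps the normalization rules (consistent identifiers, UTC timestamps, one unit per measurement, rejected bad records) easy to read and test.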

“Summaries and aggregations that took us hours to run can now be done in minutes,” a data analytics manager at the company said after the engagement hit this milestone.

Blueprint has already kicked off Phase 2 of the project to continue the development of the modern data estate. This phase of the project includes migrating the company’s financial data from an on-premises ERP system to the cloud-based modern data estate. The data will be modeled using Azure SQL and Azure Data Factory. Power BI will be used to produce self-service reports and dashboards.

Future phases of the project will focus on migrating more data, including drilling performance, supply chain and asset management data, into the modern data estate and making that data more accessible for business intelligence.

“As more data is migrated into their modern data estate, the company will be able to manipulate data together in ways they never could before,” a Blueprint developer said.