At a U.S. Geospatial Intelligence Foundation conference, retired Marine Corps Gen. James E. Cartwright, former Vice Chairman of the Joint Chiefs of Staff, said he would need 2,000 analysts to process the video feeds collected by a single Predator drone fitted with next-generation sensors.

This very real problem raised the question: How might the power of cloud computing be leveraged to scale the work of human analysts?

In partnership with Microsoft, Blueprint Technologies set out to create video analytics technology that could process video quickly enough to augment the work of human analysts. The goal was to integrate these capabilities into the existing intelligence analysis workflow. The result was NASH, a product built on Microsoft Azure that uses advanced analytics and machine learning to help organizations detect and track objects in motion more accurately in their video assets and extract pattern-based insights. It reached the Azure Marketplace in June 2020.

Quick video analysis and pattern recognition can help prevent terrorist attacks, drive global logistical improvements and decrease threat response time, but analysts still perform most of that work manually. Named after the mathematician John Nash (depicted in the movie “A Beautiful Mind”), NASH can be used in any industry where advanced pattern recognition is a decisive advantage, but making it available to federal agencies was a clear starting point.

“Having been in the Army, I understand the impact video analytics can have on these federal agencies,” said Blueprint Managing Director of Innovation Gary Nakanelua. “Suboptimal or inefficient solutions have more serious consequences than a hit to the bottom line. As in medicine, people’s lives are impacted by the work these people do every day. I don’t think someone has to have served to appreciate what’s at stake, but having served myself, that appreciation is ingrained in me.”

The U.S. government had been trying to better incorporate video technology into its analysis workflows to help analysts quickly identify patterns and irregularities but had not made much progress. Microsoft turned its efforts to finding a partner who could demonstrate what was truly possible using advanced video analytics on Azure. As a Microsoft Gold Certified Partner, Blueprint began working on a video analytics solution to accomplish those goals.

“The agencies wanted something different, but they were unsure what that ‘something’ was,” Gary said. “In my experience, many companies would freak out when handed a request with so many unknowns. But we were excited because we thrive on that level of open possibility.”

Blueprint spent two weeks in April 2020 embedded virtually with a cross-agency team, observing processes, seeing how analysts used technology and learning the ins and outs of their workflows, all to understand the problems the analysts faced and how video analytics could improve the overall intelligence analysis process.

Known for its focus on exploration and experimentation, Blueprint thrives when facing serious problems. A typical agile approach, while ideal on paper, is often unfit for complicated, real-world scenarios. That approach often produces a time-consuming, inflexible development process and a book of work that may not actually solve the true problem.

Utilizing Blueprint’s Facilitated Innovation methodology, Gary and his team developed three concepts within the first three days of immersion. By shifting from time-boxed activity to delivering value quickly, organizations can focus on the impact of the solution, rather than flashy interfaces or lofty promises.

“Keeping everything raw allows us to pivot through multiple possibilities and quickly validate potential solutions. Often, the optimal solution is one driven by constraints,” Gary said. “In our world, one of the most powerful constraints is time. The longer something takes, the more it costs. When you’re working with the unknown, you need to get to a state of known quickly.”

As Gary and his team studied the inner workings of the federal agency and created concepts, the engineering workstream kicked off. While not all the details were known, there were enough foundational components to get started: The solution needed to be on Azure and had to be capable of processing huge volumes of video data that would be stored and analyzed elsewhere. Parallel work and development streams would accelerate the project’s timeline drastically.

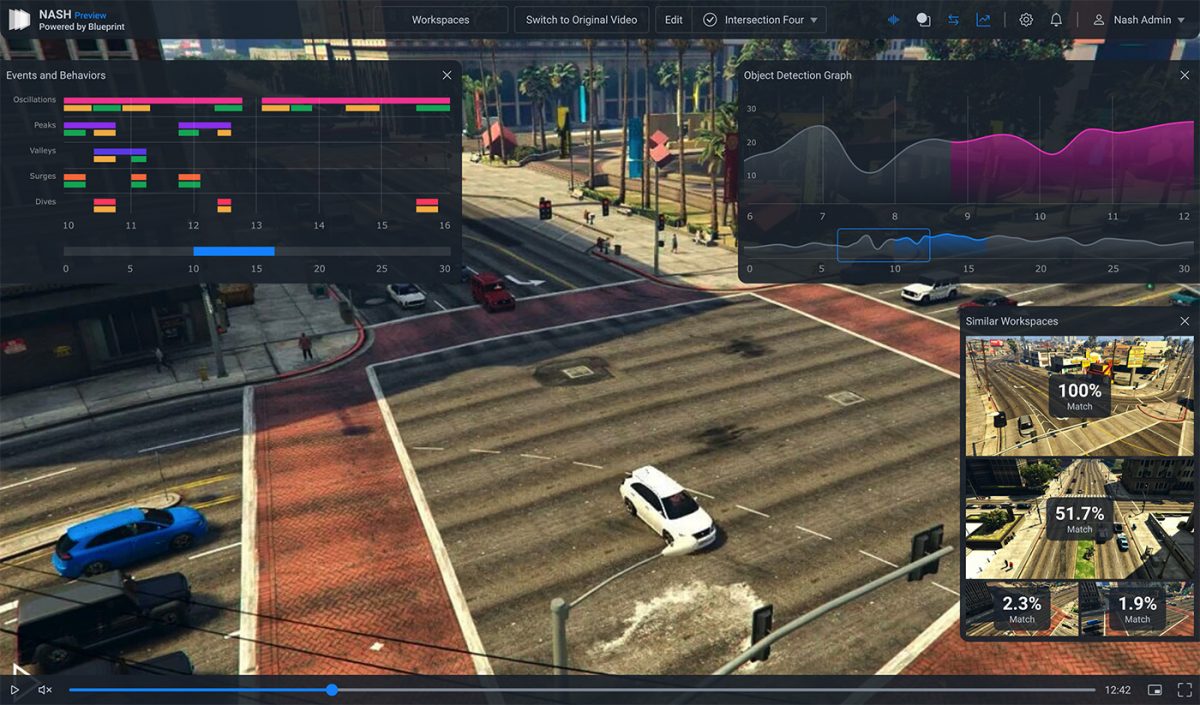

Gary’s team used the director mode of Grand Theft Auto V to train the machine learning behind NASH’s pattern recognition.

Early on, Blueprint’s data scientists faced a lack of the video assets needed to train the machine learning models NASH uses to identify and track objects. The federal agency’s video was classified and inaccessible, so another source of video data was needed. Gary’s team realized that the virtual worlds of video games could hold the key. When developing Grand Theft Auto V, Rockstar North sent multiple research teams throughout Los Angeles and shot over 250,000 images and thousands of hours of video to create Los Santos, the GTA version of Los Angeles. Because the game includes a director mode that allows users to control traffic density, pedestrian population, time of day, weather and camera angle, Gary’s team was able to collect the necessary quantities of raw training footage to begin NASH’s pattern recognition training.
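One way to picture how those director mode controls could yield varied training footage is to enumerate every combination of settings as a capture plan. The sketch below is only an illustration: the article names the five controls but not the values or tooling Gary’s team used, so every setting value and the `capture_plan` helper are hypothetical.

```python
from itertools import product

# Hypothetical setting values: the article names these five controls
# but does not say which values were actually recorded.
TRAFFIC_DENSITY = ["low", "medium", "high"]
PEDESTRIAN_POPULATION = ["sparse", "crowded"]
TIME_OF_DAY = ["dawn", "noon", "dusk", "night"]
WEATHER = ["clear", "rain", "fog"]
CAMERA_ANGLE = ["overhead", "oblique"]


def capture_plan():
    """Return one scenario dict per combination of the five controls."""
    keys = ("traffic", "pedestrians", "time_of_day", "weather", "camera")
    combos = product(
        TRAFFIC_DENSITY,
        PEDESTRIAN_POPULATION,
        TIME_OF_DAY,
        WEATHER,
        CAMERA_ANGLE,
    )
    return [dict(zip(keys, combo)) for combo in combos]


plan = capture_plan()
print(len(plan))  # 3 * 2 * 4 * 3 * 2 = 144 scenarios
```

Even a handful of values per control multiplies into well over a hundred distinct scenes, which suggests why a configurable virtual world can stand in for footage that is otherwise unavailable.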

The federal agency recognized the value of video data in pattern recognition and tracking but had been unable to get the processing time fast enough to make its inclusion feasible. The agency didn’t have time to wait hours, days or even weeks for processed video. Decisions had to be made faster than that, meaning that everything was still run through human analysts.

The on-demand scalability of Azure sidesteps the physical infrastructure concerns that normally plague video processing pipelines. If an agency maintained its own physical infrastructure and wanted to decrease processing time, it would face a time-consuming investment in new hardware. It could take years to get the processing power needed, and by then any opportunity to address an immediate threat would have passed. By default, NASH takes ten minutes to process every one minute of video footage. But because NASH utilizes Azure and Databricks, a user can instantly switch from standard processing on CPUs (the regular processor in a computer) to GPUs (processors purpose-built for graphics and video), providing immediate speed gains.
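To put the default ratio in concrete terms, the arithmetic can be sketched as below. The 10:1 ratio comes from the figure above; the `speedup` parameter and the 4x value in the example are hypothetical placeholders, since the article does not state how much faster GPU processing is.

```python
# Illustrative arithmetic only. The 10:1 ratio is the default stated in the
# article; the speedup factor is an assumed placeholder, not a measured figure.

DEFAULT_RATIO = 10.0  # minutes of processing per minute of footage


def processing_minutes(footage_minutes: float, speedup: float = 1.0) -> float:
    """Estimate processing time for a clip under a hypothetical speedup."""
    if footage_minutes < 0:
        raise ValueError("footage_minutes must be non-negative")
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return footage_minutes * DEFAULT_RATIO / speedup


# One hour of footage at the default ratio: 600 minutes, or 10 hours.
one_hour_default = processing_minutes(60)

# The same hour with an assumed 4x speedup: 150 minutes.
one_hour_faster = processing_minutes(60, speedup=4)
```

At the default ratio, a single hour of footage ties up ten hours of processing, which is why being able to change the hardware behind the pipeline on demand matters.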

“The backend of NASH is what demonstrates the power of video analytics,” Gary said. “Yes – there is a phenomenal user experience on top of that, but that scalability gives you performance on demand.”

With the launch of NASH, this federal agency was finally able to move toward its goal of faster, better video processing. For the agency, it meant improved recognition and response times for various areas and activities of interest. In the broader world, organizations in all industries now have the ability to maximize the value of existing video assets.
