The Need for UniForm

Organizations make strategic decisions and investments in specific lakehouse table storage formats that best suit their needs. We’ve seen this firsthand with organizations of all sizes across various industries, including retail, insurance, oil & gas, and technology. These decisions, while critical at the time, can later pose challenges as technologies evolve and new solutions emerge.

One of these challenges is migrating to a different table storage format. Migration can be daunting and is fraught with risk and complexity. The process demands significant time, resources, and expertise, and it can disrupt ongoing data operations. Moreover, there’s the risk of data loss or corruption during the migration and the potential for compatibility issues with existing tools and workflows.

Enter Delta Lake’s new feature, UniForm. Recognizing the need for a smoother transition between different storage formats, UniForm unifies these formats, thereby reducing the barriers to adopting new technologies. By adding support for Apache Iceberg and Apache Hudi, UniForm allows organizations to leverage their existing investments in table storage formats while benefiting from the added flexibility and capabilities offered by Delta Lake.

With UniForm, transitioning to new Lakehouse table storage formats no longer needs to be disruptive. Instead, it becomes an opportunity to enhance data operations and drive innovation. It is also remarkably quick to get started.

Testing UniForm

Our initial test focused on AWS and Apache Iceberg, partly because a portion of our customers are intimately familiar with S3. We also tested UniForm on Azure Data Lake Storage (ADLS), but we will save that for Part 2.

For those who want to follow along but may be unfamiliar with an Apache Iceberg lakehouse setup, we recommend checking out Project Nessie and this great notebook from Dremio.

Some may notice that this testing setup leans towards a Snowflake environment. The promise of UniForm is unifying table storage formats because “the rising tide lifts all boats.” We are opinionated but, for the benefit of our customers, maintain a platform-agnostic view of the Lakehouse.

So, with UniForm, data stored in Delta Lake can be read by Iceberg clients as if it were stored natively in Iceberg. Writes still go through Delta Lake, because UniForm exposes the table to Iceberg readers as read-only. Before we continue, it’s worth noting that if you are a Snowflake customer, support for Apache Iceberg tables must be enabled on your account.

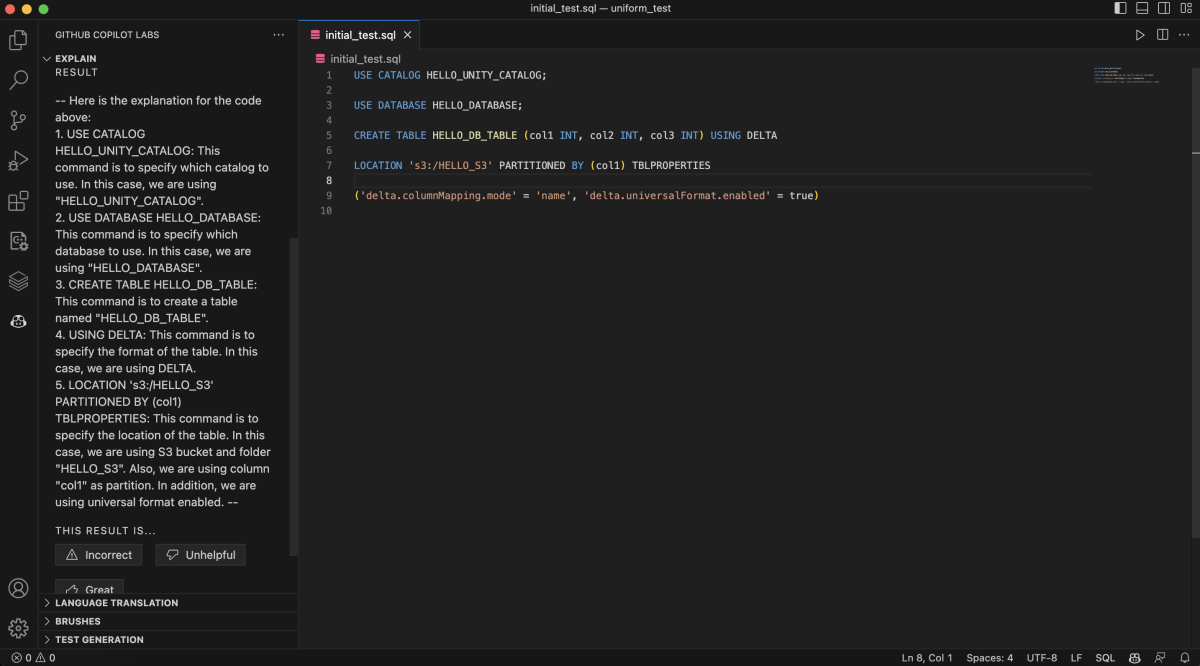

How easy is UniForm to enable? It's simply a property on your table.

(If you’re curious, you can see how GitHub Copilot explains this SQL code on the left.)
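For readers who cannot see the screenshot, here is a minimal sketch of what "a property on your table" can look like. It is a small Python helper that only builds the SQL text; it does not connect to any engine. The table name and columns are hypothetical, and the property key `delta.universalFormat.enabledFormats` is the one described in the Delta Lake UniForm documentation. Depending on your Delta Lake or Databricks runtime version, additional compatibility properties may be required, so treat this as an illustration rather than the exact statement we ran.

```python
def uniform_table_ddl(table_name, columns, formats=("iceberg",)):
    """Build a CREATE TABLE statement that enables UniForm via a table property.

    table_name and columns are illustrative placeholders, not names from our test.
    """
    if not columns:
        raise ValueError("at least one column is required")
    if not formats:
        raise ValueError("at least one target format is required")

    # Render "name TYPE" pairs for the column list.
    column_list = ", ".join(f"{name} {dtype}" for name, dtype in columns)
    # UniForm takes the target formats as a comma-separated property value.
    enabled = ",".join(formats)

    return (
        f"CREATE TABLE {table_name} ({column_list}) USING DELTA "
        f"TBLPROPERTIES ('delta.universalFormat.enabledFormats' = '{enabled}')"
    )


# Hypothetical table used only for illustration.
ddl = uniform_table_ddl(
    "sales.orders",
    [("order_id", "BIGINT"), ("amount", "DOUBLE")],
)
print(ddl)
```

The resulting string can be pasted into a SQL editor or passed to whatever client you already use to run statements against your Delta tables.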

Part 1: Initial Impressions

The Lakehouse architecture promises the best of the data warehouse and data lake paradigms. It transcends vendors and lets organizations turn their data into valuable new data products. For example, our Large Language Model (LLM) Center of Excellence recommends a Lakehouse as a critical first step in a cohesive data and AI strategy. Organizations shouldn’t be punished for something like metadata formats.

As we began exploring UniForm, the simplicity stood out immediately. Unifying different table formats under one umbrella is a game-changer in data lake architectures. The addition of support for Apache Iceberg and Apache Hudi is significant, and it opens up new possibilities for organizations looking to optimize their data operations.

Stay tuned for Part 2, where we take a look at testing UniForm on Azure.