As a leader in FinOps, your focus is on managing cloud and data costs effectively while maximizing the business value of your investments. Cost optimization, financial accountability, and control over data workflows are the name of the game. In this blog post, we’ll present typical business problems and questions a FinOps leader comes across, and show how the Lakehouse Optimizer (LHO) helps address them across three stages: Discover, Optimize, and Operate.

Discover

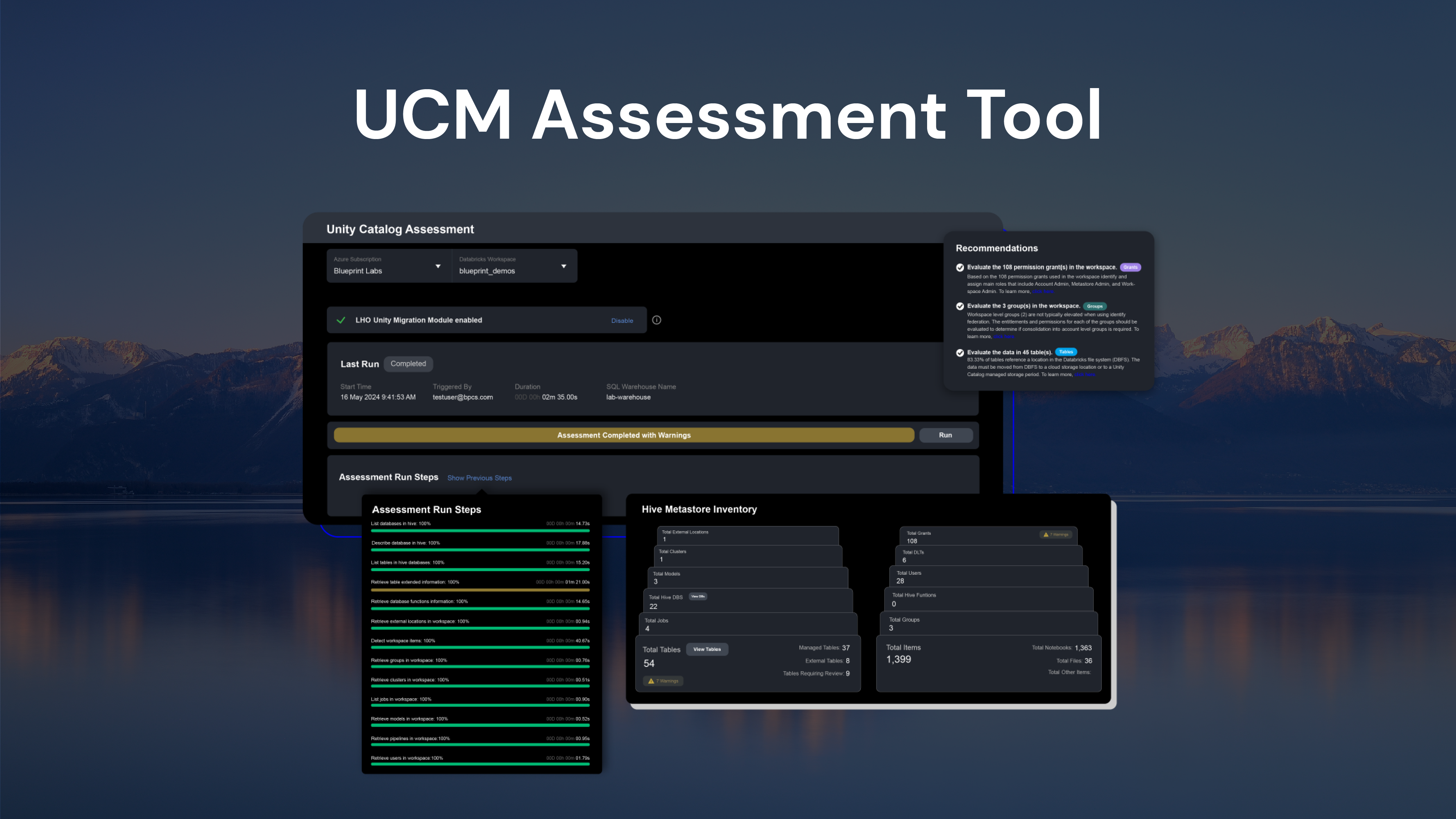

How can I narrow in on the most vital items in my Databricks Lakehouse that need attention?

The Lakehouse Optimizer (LHO) has an incidents engine that operates in near real time. It flags workloads and interactions across more than 30 incident types, generates prescriptive recommendations for action, and consolidates them on the incidents tab. You can group incidents by owner and set up configurable alerts for different groups of users to drive timely action.
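To make the "group by owner, alert per group" idea concrete, here is a minimal sketch in plain Python. It does not use or reflect the LHO's actual schema or API: the `Incident` record, the incident type labels, the estimated-waste field, and the per-owner threshold map are all hypothetical stand-ins chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical incident record; the real LHO data model may differ.
@dataclass
class Incident:
    owner: str
    incident_type: str  # hypothetical label, e.g. "idle_cluster"
    est_monthly_waste_usd: float


def group_by_owner(incidents):
    """Group incidents by owner, similar in spirit to a group-by-owner view."""
    groups = defaultdict(list)
    for inc in incidents:
        groups[inc.owner].append(inc)
    return dict(groups)


def owners_to_alert(incidents, thresholds, default_threshold=500.0):
    """Return owners whose total estimated waste meets their alert threshold.

    `thresholds` maps owner -> USD threshold (a hypothetical alert config);
    owners without an entry fall back to `default_threshold`.
    """
    alerts = {}
    for owner, items in group_by_owner(incidents).items():
        total = sum(i.est_monthly_waste_usd for i in items)
        if total >= thresholds.get(owner, default_threshold):
            alerts[owner] = round(total, 2)
    return alerts


incidents = [
    Incident("data-eng", "idle_cluster", 420.0),
    Incident("data-eng", "oversized_driver", 180.0),
    Incident("analytics", "no_autotermination", 90.0),
]
print(owners_to_alert(incidents, thresholds={"analytics": 50.0}))
```

With these sample records, both groups cross their thresholds (600 USD against the 500 USD default for `data-eng`, 90 USD against a custom 50 USD for `analytics`), which shows how different user groups can be held to different alert levels.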

I’m looking for an optimization tool that can easily integrate with my financial management tools.

All the telemetry data collected by the LHO is stored in your tenant in an easy-to-access database. We also provide complimentary Databricks notebooks to fast-track your integration with other systems.

Optimize

I’m having issues rightsizing my commitment to both Databricks and my cloud provider.

The LHO evaluates how much of your spend is healthy versus unhealthy and provides a path to optimization, so you can forecast and commit with confidence at the right level for both your cloud provider and Databricks.
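The reasoning behind sizing a commitment on healthy spend can be sketched with simple arithmetic. This is a hypothetical illustration, not the LHO's forecasting method: it assumes you already have monthly totals and the portion flagged as unhealthy (waste), and it commits to a fraction (`coverage`, a made-up parameter) of the average healthy run rate.

```python
from statistics import mean


def healthy_spend(monthly_spend, unhealthy_spend):
    """Subtract spend flagged as unhealthy (waste) from total spend, month by month."""
    if len(monthly_spend) != len(unhealthy_spend):
        raise ValueError("series must be the same length")
    return [total - waste for total, waste in zip(monthly_spend, unhealthy_spend)]


def suggested_annual_commit(monthly_spend, unhealthy_spend, coverage=0.8):
    """Size an annual commitment on healthy spend only.

    `coverage` (hypothetical) commits to a fraction of the average healthy
    monthly run rate, leaving headroom for variability.
    """
    if not 0 < coverage <= 1:
        raise ValueError("coverage must be in (0, 1]")
    healthy = healthy_spend(monthly_spend, unhealthy_spend)
    return round(mean(healthy) * 12 * coverage, 2)


monthly = [100_000, 110_000, 105_000]
unhealthy = [20_000, 30_000, 25_000]
print(suggested_annual_commit(monthly, unhealthy))
```

Committing on the raw totals with the same 80% coverage would give 1,008,000, while the healthy-spend basis gives 768,000. The gap is the waste you would otherwise lock into a contract before optimizing it away.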

How can I track, attribute, and charge back granular usage of Databricks across my organization?

The LHO leverages tags to group any combination of Databricks resources and provide a breakdown of cloud provider and Databricks costs. It allows for chargeback based on business units, teams, business processes, projects, use cases, and more.
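Tag-based chargeback boils down to a group-by over tagged usage records. The sketch below assumes hypothetical usage rows that already carry a `tags` dictionary plus separate Databricks (`dbu_cost`) and cloud provider (`cloud_cost`) amounts. These field names are illustrative, not the LHO's storage format. Resources missing the chosen tag land in an explicit "untagged" bucket so that no spend silently disappears from the report.

```python
from collections import defaultdict

# Hypothetical usage records: one row per resource per billing period.
usage = [
    {"resource": "etl-job-1", "tags": {"team": "data-eng", "project": "churn"},
     "dbu_cost": 1200.0, "cloud_cost": 800.0},
    {"resource": "bi-warehouse", "tags": {"team": "analytics"},
     "dbu_cost": 500.0, "cloud_cost": 300.0},
    {"resource": "adhoc-cluster", "tags": {},
     "dbu_cost": 150.0, "cloud_cost": 50.0},
]


def chargeback(records, tag_key, untagged_label="untagged"):
    """Sum Databricks and cloud provider costs per value of one tag key."""
    totals = defaultdict(lambda: {"databricks": 0.0, "cloud": 0.0, "total": 0.0})
    for rec in records:
        group = rec["tags"].get(tag_key, untagged_label)
        bucket = totals[group]
        bucket["databricks"] += rec["dbu_cost"]
        bucket["cloud"] += rec["cloud_cost"]
        bucket["total"] += rec["dbu_cost"] + rec["cloud_cost"]
    return dict(totals)


for team, costs in chargeback(usage, "team").items():
    print(team, costs)
```

Switching `tag_key` from `"team"` to `"project"` re-slices the same records by project. Grouping by several tags at once would only require keying the buckets on a tuple of tag values instead.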

Is there a way to save cost by consolidating redundant compute and storage?

Yes.

The LHO detects redundant objects used in workflows and notebooks across Hive metastores and Unity Catalog and provides recommendations for consolidating them. Enterprises have saved 25% on their bills by following these recommendations.

Operate

How do we prioritize optimization within the organization?

The LHO is licensed site-wide, so any Databricks user can access the platform. The application is designed to integrate into the daily workflow of data practitioners and provides direct links to knowledge base articles to upskill team members. With configurable alerts and recommendations, the right people see the right information at the right time, and near real-time incident tracking lets data practitioners stay in their optimization workflow.

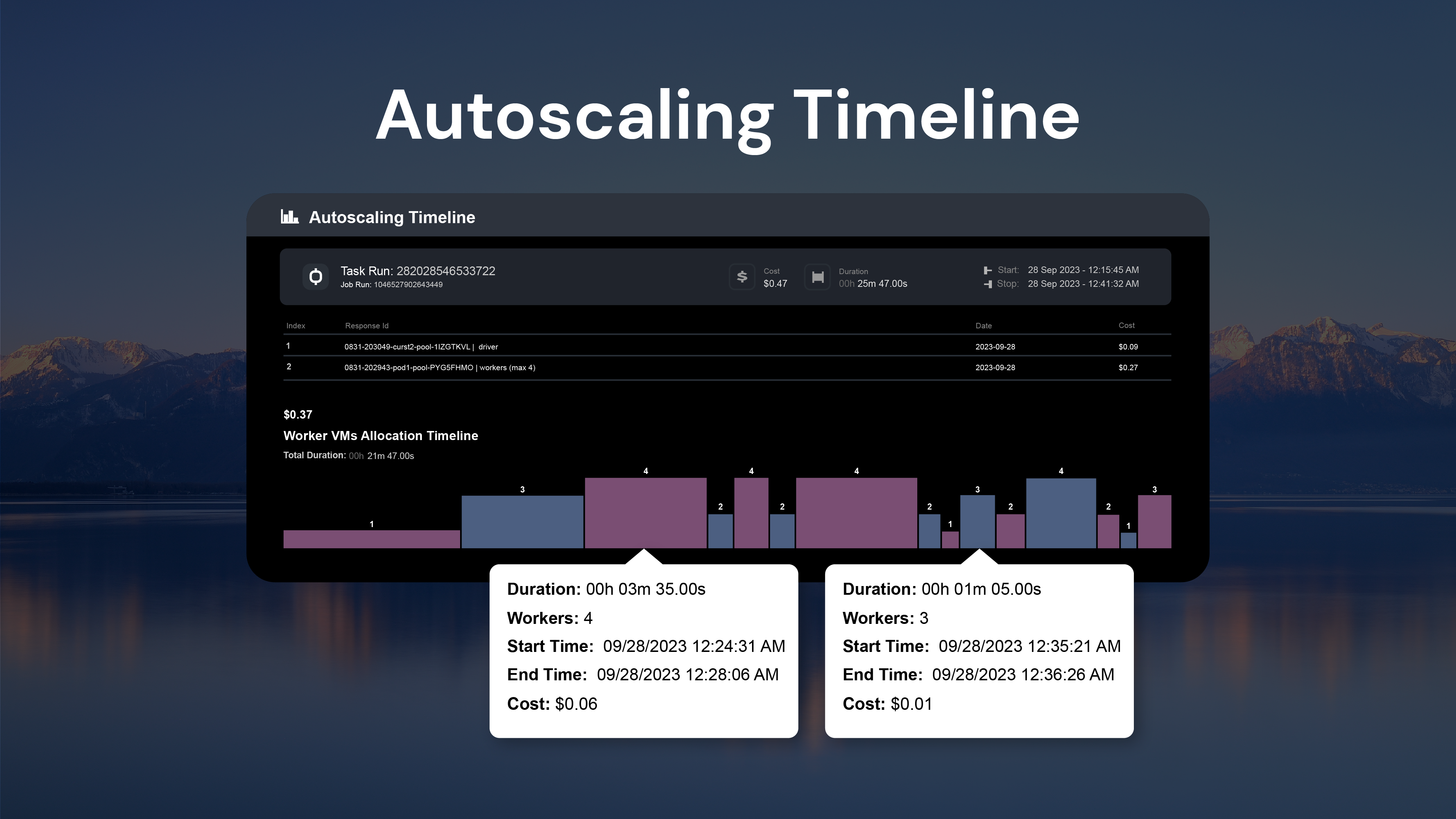

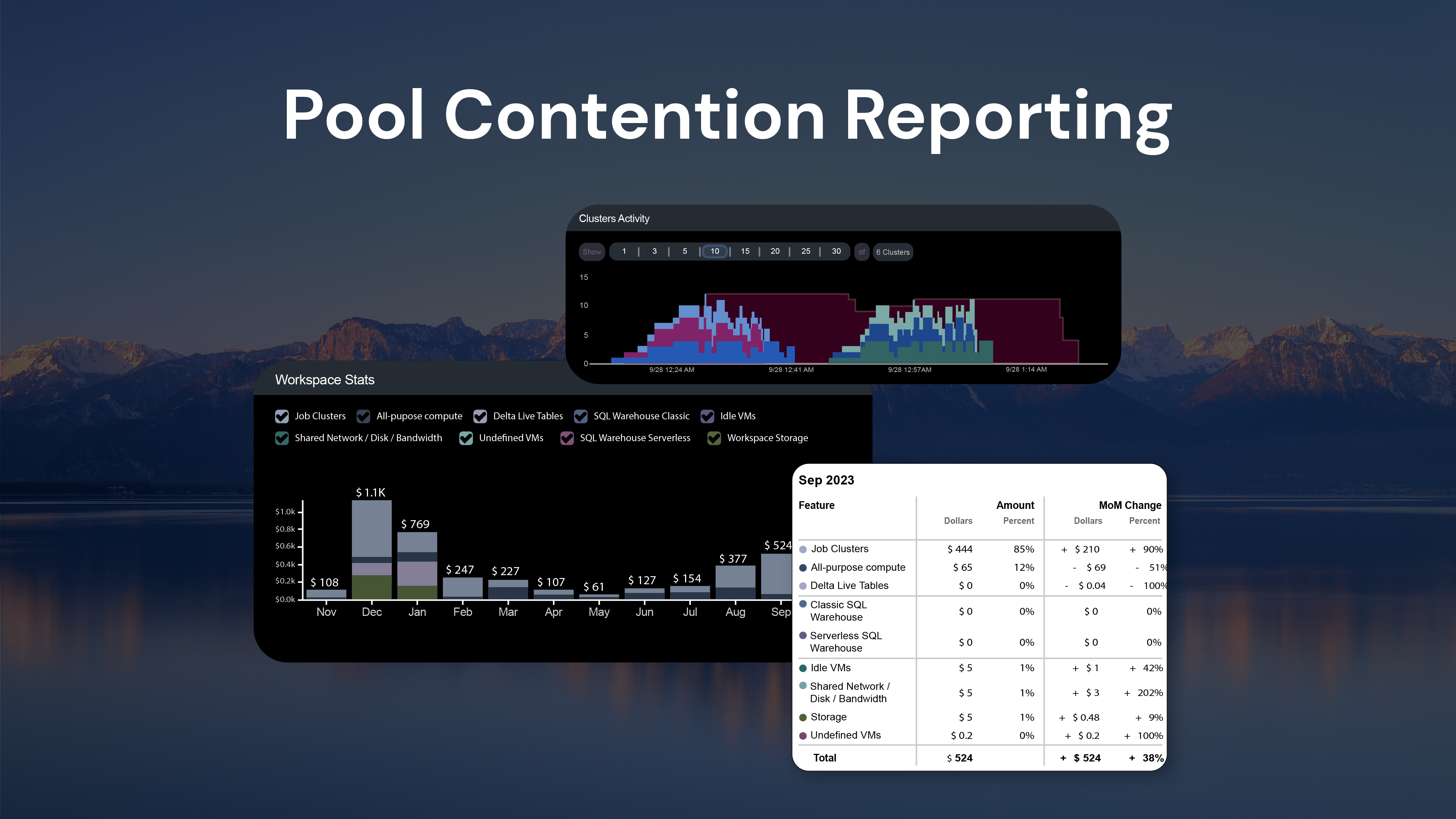

What makes the Lakehouse Optimizer different from other financial management tools that integrate with Databricks?

We like to call it “unparalleled actionability.” The LHO provides prescriptive recommendations down to the deepest level of interaction with the Databricks platform, including autoscaling events, nested notebook orchestrations, pool contention insights, compute instance type and size, and optimizations for serverless.

The Lakehouse Optimizer is designed to empower FinOps leaders by providing actionable insights and tailored solutions to optimize Databricks and cloud expenditures.

Whether you’re looking to identify critical areas of attention, integrate seamlessly with financial management tools, right-size commitments, or track and charge back usage, the LHO offers unparalleled capabilities. By leveraging its near real-time incident tracking, prescriptive recommendations, and comprehensive optimization features, you can drive financial accountability and maximize the value of your data investments.