Switching to the cloud means big opportunities and new capabilities, but there is a frequent argument that it is just too expensive. The reality is that it can be difficult to monitor spend when operating in the cloud. But this visibility problem has led some companies to underestimate the potential ROI of cloud data efforts and thus underinvest in them, giving competitors a pronounced advantage.

Companies have long been accustomed to fixed-cost structures for their servers, applications and storage arrays, consisting of one-time procurement and setup costs and annual maintenance fees. Cloud subscriptions, on the other hand, are based on a company’s storage and compute consumption. Unforeseen cloud usage costs are a serious issue – so much so that companies have launched solutions to help businesses keep a better eye on cloud consumption. But knowing how much you’re spending won’t make it efficient – that requires cloud optimization.

Working with a partner to clean up a messy cloud operation can immediately stop unnecessary spending and create room in the budget for other essential investments, such as modernizing your data platform to allow streaming data, which enables real-time insights to drive smarter business decisions. Or you could launch or expand a data science initiative that enables machine learning to predict inventory requirements in a store or needed maintenance on field equipment.

Being used by the cloud rather than using the cloud

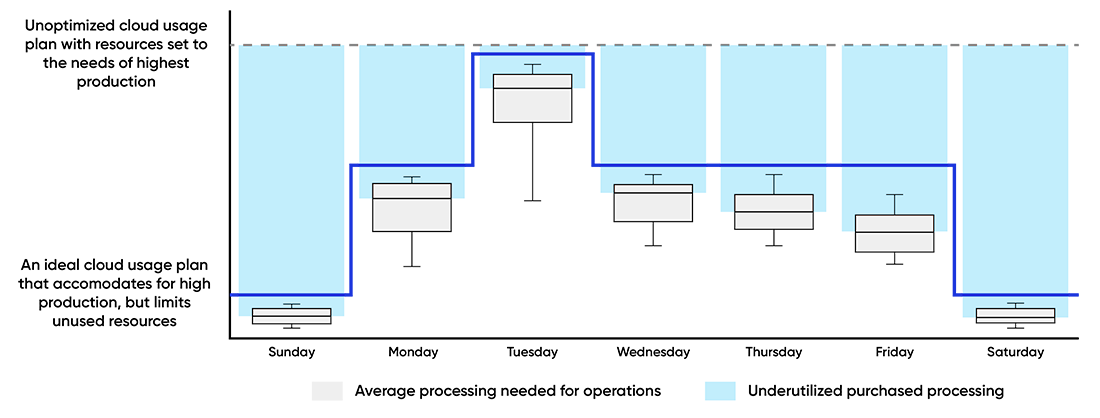

The cloud allows users to turn every dial up and down as needed. But a common problem we see involves companies operating in an oversized environment. For the sake of development speed, companies often bump up a resource to its premium level or size to meet unforeseen spikes in demand and ensure continued business operations. But when the project is complete, the resource is never adjusted back down to an appropriate level. If a company needs to process a large workload only once a day and requires significantly less computing power the rest of the time, that extra processing power does not need to run around the clock. One of the cloud’s benefits is its elasticity. Companies need to choose and adjust resources based on their workloads, so they don’t pay for unutilized or underutilized resources.
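To make the once-a-day workload example concrete, here is a rough back-of-the-envelope sketch. The hourly rates and the two-hour batch window are made-up numbers for illustration, not figures from any provider or client, and `monthly_cost` is a hypothetical helper.

```python
def monthly_cost(hourly_rate: float, hours_per_day: float, days: int = 30) -> float:
    """Cost of running one resource for the given number of hours each day."""
    return hourly_rate * hours_per_day * days


# Hypothetical figures, for illustration only.
LARGE_RATE = 8.00  # $/hour for the oversized cluster
SMALL_RATE = 1.00  # $/hour for a baseline cluster
BATCH_HOURS = 2    # hours per day the large workload actually runs

# Option 1: leave the large cluster running around the clock.
always_on = monthly_cost(LARGE_RATE, 24)

# Option 2: run the large cluster only for the batch window and a
# smaller cluster for the remaining hours of the day.
right_sized = (
    monthly_cost(LARGE_RATE, BATCH_HOURS)
    + monthly_cost(SMALL_RATE, 24 - BATCH_HOURS)
)

savings = always_on - right_sized
print(f"Always on:   ${always_on:,.0f} per month")
print(f"Right-sized: ${right_sized:,.0f} per month")
print(f"Savings:     ${savings:,.0f} per month ({savings / always_on:.0%})")
```

Real pricing depends on the provider, region and commitment discounts, so treat this as a way to frame the question rather than an estimate.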

Another common problem we encounter occurs when companies leave unused environments running unnecessary workloads. Development typically involves testing and QA environments. Once a project has moved to production, those environments no longer need to stay on, yet they often do. These oversights waste money and inflate the cost of the efforts, hurting ROI.

Continually hemorrhaging cash due to a lack of transparency is, of course, not the answer. A better solution is to work with a partner to identify and resolve messy cloud operations. These efforts often pay for themselves and create room in the budget for other necessary expenditures.

Instant savings

We recently worked with a cloud management company on cost containment and optimization. While documenting its architecture, we found two sizeable virtual machine clusters that were rarely used but had never been turned off. One of the clusters, for example, served Power BI dashboards, and it was kept running to be ready for any request that might come in. But, as with most cloud resources, those clusters don’t need to be left running. They can be spun up on demand. By optimizing system monitoring and taking advantage of the cloud’s inherent flexibility, we reduced the company’s cloud costs by $100,000 in less than three weeks.

We’re also analyzing the load each cluster carries when running and have already found multiple instances of oversizing. We are in the process of pulling together recommendations and calculating the cost savings of reducing cluster sizes. Unfortunately, uncovering inefficiencies can be difficult because cloud providers typically don’t make it easy to break down costs by data product or asset. But a partner with the right development, architecture and data science chops can quickly evaluate and optimize any environment.
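One common way to get a per-product view is to tag resources and roll up billing line items by tag. The sketch below assumes a simplified export where each line item is a dictionary with a `cost` and optional `tags`. The field names, the `data_product` tag key and the sample resources are all hypothetical, and real billing exports differ by provider.

```python
from collections import defaultdict


def cost_by_product(line_items, tag_key="data_product"):
    """Sum line-item costs per tag value; untagged spend gets its own bucket."""
    totals = defaultdict(float)
    for item in line_items:
        product = item.get("tags", {}).get(tag_key, "untagged")
        totals[product] += item["cost"]
    return dict(totals)


# Hypothetical billing line items, for illustration only.
line_items = [
    {"resource": "cluster-a", "cost": 1200.0, "tags": {"data_product": "sales-dashboards"}},
    {"resource": "cluster-b", "cost": 800.0, "tags": {"data_product": "inventory-forecast"}},
    {"resource": "storage-1", "cost": 150.0, "tags": {"data_product": "sales-dashboards"}},
    {"resource": "vm-legacy", "cost": 430.0, "tags": {}},
]

totals = cost_by_product(line_items)
for product, cost in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{product:20s} ${cost:,.2f}")
```

A large `untagged` bucket is often the first place to look, since spend that nobody owns is spend that nobody is reviewing.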

It’s about more than money

Suboptimal cloud operations lead to other serious issues, from security to business resilience. While analyzing the cloud management company’s architecture, we found that one of its vital production clusters was on the verge of crashing due to a memory leak and would have crashed within a week. The company had no idea. We informed the company of the problem, developed a solution and provided some training to keep it from happening again. The benefits of continual cloud optimization extend well beyond cost management.

The only way to be sure you are optimizing your cloud operations for cost and performance is to analyze them continually and creatively. The fastest, most efficient way to do that is to work with a partner who has mastered the process. Let’s start a conversation about how Blueprint can help you keep your cloud operations running smoothly and free up resources for new opportunities and technologies.

Performance optimization for Databricks

Optimize code and infrastructure to increase your run speed by up to 100x and reduce your cloud spend by 50% on any cloud platform.