The problem

A renewable energy company focused on solar, wind and energy storage was experiencing rapid growth and planned to continue that expansion via both acquisitions and the construction of new facilities. Facing aggressive goals, company leadership recognized the need to establish a new data infrastructure to meet both current and future needs.

The company’s financial, production, land and construction data were all housed in separate, unconnected systems. Third-party systems supplied additional information, including geologic data. Employees often lacked direct access to much of the data and spent hours manually extracting and transforming it to produce bare-bones reports.

One high-priority problem was that the company’s construction and financial data were not directly connected. The financial data was housed in NetSuite, while the construction data was scattered across dozens of rudimentary spreadsheets. Leadership and management lacked visibility into budget, spend and invoice data, which made project management extremely difficult and accurate forecasting impossible. Because the data could not be trusted or acted on, still more time was lost repeatedly validating results before reports could be produced.

“Parcels could have multiple legal names,” one employee said. “That could double or triple the acreage reported.”

These manual processes would become increasingly unsustainable at the rate the company hoped to grow.
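The acreage problem the employee describes is easy to reproduce. The sketch below is a minimal illustration, not the company’s actual data model: it assumes a hypothetical `parcel_id` field that stays stable across a parcel’s legal names, and the records are made-up example values. Summing every row counts a parcel once per legal name, while keying on the parcel identifier counts it once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParcelRecord:
    parcel_id: str    # hypothetical stable identifier shared by all names of one parcel
    legal_name: str   # one of possibly several legal names for the parcel
    acres: float


def naive_total_acres(records):
    # Sums every row, so a parcel listed under three legal names is counted three times.
    return sum(r.acres for r in records)


def deduplicated_total_acres(records):
    # Keeps the first acreage value seen for each parcel_id, then sums.
    acres_by_parcel = {}
    for r in records:
        acres_by_parcel.setdefault(r.parcel_id, r.acres)
    return sum(acres_by_parcel.values())


# Illustrative records only: one parcel appears under three legal names.
records = [
    ParcelRecord("P-001", "Smith Ranch", 40.0),
    ParcelRecord("P-001", "Smith Family Trust", 40.0),
    ParcelRecord("P-001", "J. Smith", 40.0),
    ParcelRecord("P-002", "North Parcel", 25.0),
]

print(naive_total_acres(records))         # 145.0
print(deduplicated_total_acres(records))  # 65.0
```

In this toy example the naive total is more than double the true acreage, which matches the "double or triple" inflation described in the quote.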

The Blueprint Way

This engagement began with a 3-week assessment of the company’s existing software and data infrastructure to identify the most significant problems and roadblocks to smart growth.

Establishing a fully modern data estate is a long-term project with far-reaching consequences, so it is essential to plan carefully and to identify the high-impact problems that can be solved along the way.

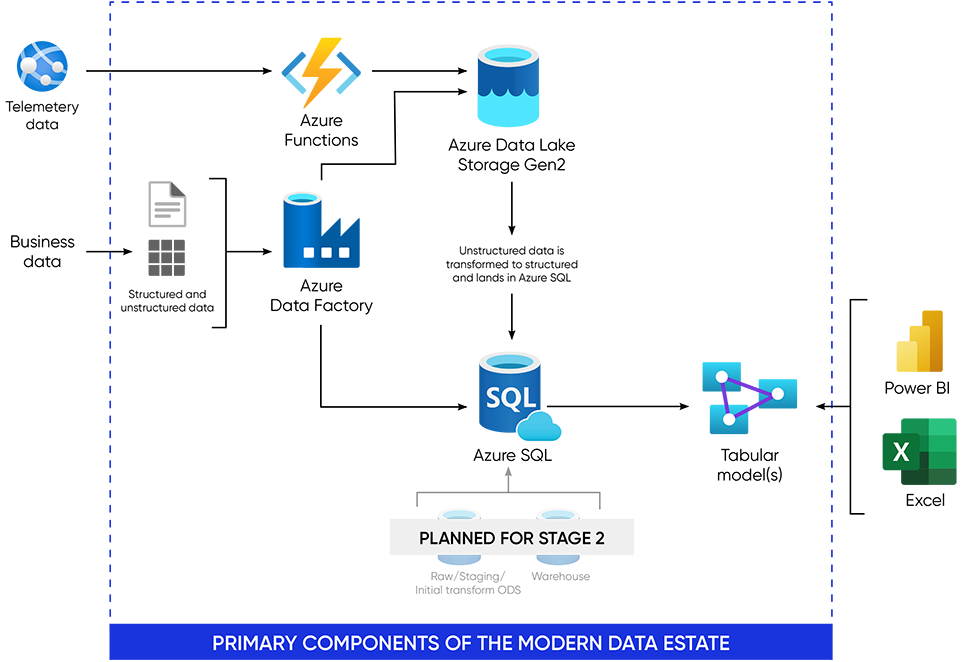

Throughout the 6-month project, Blueprint used Azure Functions and Azure Data Factory to pipe disparate data from multiple systems into an Azure data lake.

Creating quarterly and yearly financial reports for senior management had been a time-consuming, manual process involving CSV files and pivot tables. Blueprint used Azure Data Factory to retrieve financial data from NetSuite and replicate it into an Azure SQL database, refreshed daily. The company could then easily connect Power BI to that database to create timely financial dashboards.

But we went far beyond that. A project-specific spreadsheet containing telemetry data on wind speeds, energy delivered, energy billed and more could not be connected to NetSuite or PowerFactors, the company’s two core software systems. By migrating the spreadsheet to a SharePoint List, which also enforces better data quality, Blueprint connected all the data and used Power BI to create dashboards that display aggregated project information for decision-makers at multiple levels.

For the first time, the finance department has a clear picture of accounts payable, accounts receivable and invoices. The operations team can also now see precisely how much has been spent on each project and how much remains in the budget.
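Seeing spend against budget amounts to joining the two previously disconnected sources on a shared project key. The sketch below is a minimal illustration under stated assumptions, not Blueprint’s implementation: the project IDs, budget figures and invoice lines are hypothetical stand-ins for budgets that once lived in construction spreadsheets and invoice data from NetSuite.

```python
from collections import defaultdict

# Hypothetical budgets keyed by project ID (e.g., from construction tracking).
budgets = {"PRJ-A": 1_000_000.0, "PRJ-B": 500_000.0}

# Hypothetical invoice lines as (project ID, amount) pairs (e.g., from NetSuite).
invoices = [
    ("PRJ-A", 250_000.0),
    ("PRJ-A", 125_000.0),
    ("PRJ-B", 600_000.0),
]


def budget_status(budgets, invoices):
    # Total the invoiced spend per project.
    spent = defaultdict(float)
    for project_id, amount in invoices:
        spent[project_id] += amount
    # One row per budgeted project; a project with no invoices shows zero spend.
    # Invoices for projects missing from `budgets` are not reported here.
    return {
        pid: {"budget": b, "spent": spent[pid], "remaining": b - spent[pid]}
        for pid, b in budgets.items()
    }


status = budget_status(budgets, invoices)
for pid, row in status.items():
    print(pid, row)
```

A negative `remaining` value, as for the made-up PRJ-B, flags a project that has exceeded its budget. In practice the join key would have to exist and be kept consistent in both systems, which is part of why connecting the data matters.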

For teams that use third-party data, including the geology team, Blueprint automated data retrieval and the creation of the CSV files typically used for analysis. Blueprint also piped this data into the data lake for future analysis.

The next stage of this engagement involves establishing a data warehouse so the client can derive more insights from its data. The warehouse will open the door to additional data science and analysis, addressing problems beyond day-to-day operations and enabling proactive decisions. It will also simplify the ingestion of incoming data, unlocking the use of machine learning and AI to identify trends quickly and increase competitive advantage.