When Blueprint Technologies’ data science team set out to optimize a travel and hospitality company’s online advertising spend with machine learning, we found an almost uncountable number of ways the problem could be approached.

This company was spending billions annually on online advertising, but its spending on hotel ads on metasearch platforms was not producing the desired return . It wanted to reduce its spending on bleeding segments and allocate more money to profitable segments. To do that, the company needed to optimize and predict the return on advertising spend for hotels appearing in metasearch results – a problem Blueprint is perfectly positioned to solve.

From the data science perspective, this was a problem that needed to be broken down into two parts: optimization and prediction . Blueprint’s data science team dove right in to quickly deliver meaningful and useful insights to the client.

Optimization

The main purpose of metasearch is to aggregate results and present users with the best options available. By bidding for ad space on metasearch sites, a company drives visitors to their site with the hope that the user will purchase something – in this case, a hotel.

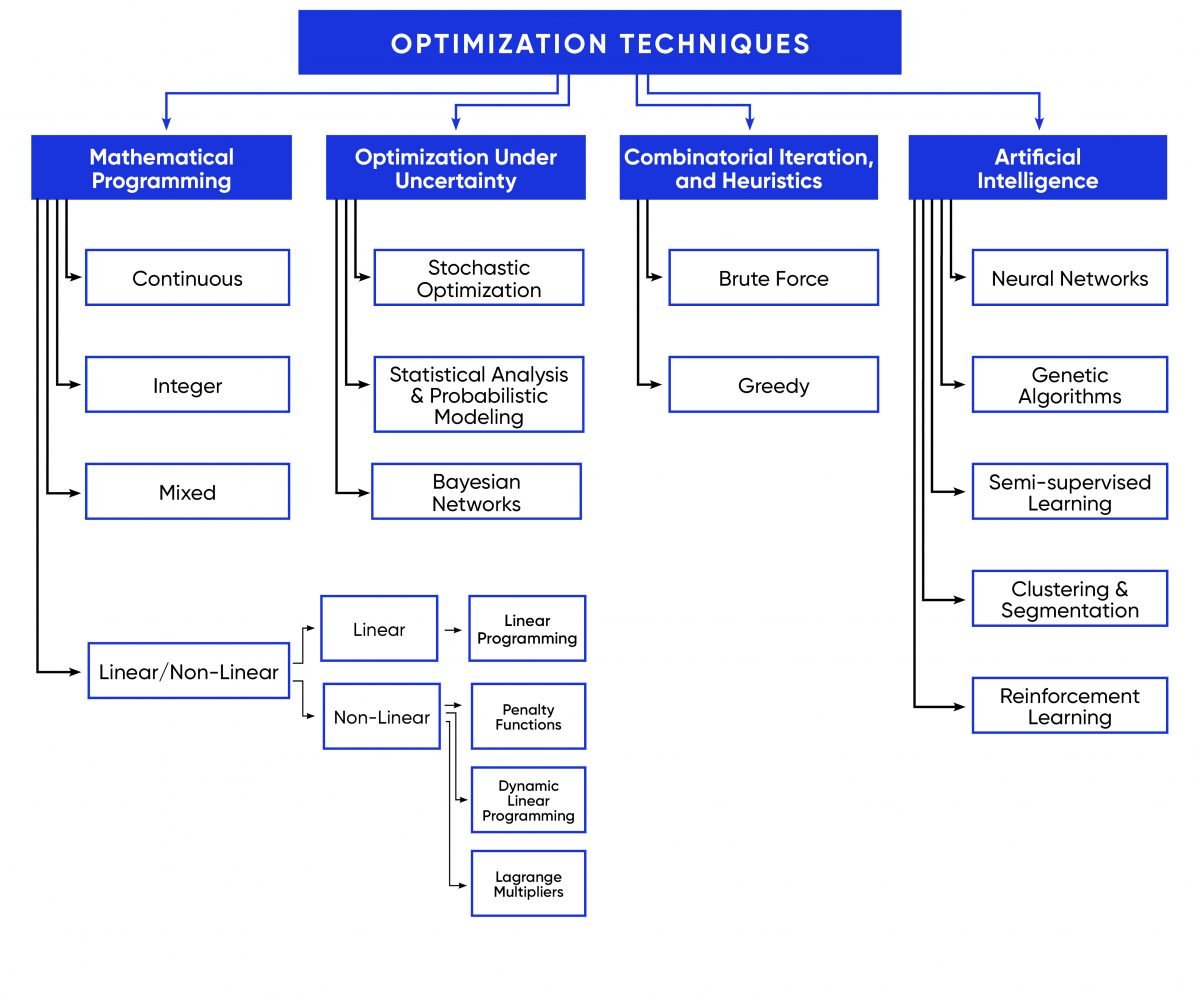

Connecting offerings and customer behaviors, trends and patterns to drive a successful marketing campaign can be extremely challenging, leaving many companies in a state of “paralysis by analysis.” There are thousands of data points and hundreds of statistical and machine learning models to choose from when trying to optimize an advertising campaign, a few of which are shown in the chart below.

For the optimization portion of this engagement, we chose dynamic linear programming because we needed to be able to tell our model exactly what we needed it to do: maximize the company’s profit based on the spend for a hotel ad and the placement of that hotel ad within a metasearch platform. That involves complicated math that needs precise inputs and should not be dependent on manual, human efforts that are often error-prone.

The alternatives to dynamic linear programming considered for the optimization layer were black-box optimization, neural networks and logistic regression.

Neural network

Using a neural network, which can be appropriate for many efforts, is all about teaching the model what it needs to do without specifics. People will often throw a huge neural network at a problem, but training and tuning often take days, can be expensive and the results are often lacking, making that approach unsuitable to this project.

Black-box optimization

Search engines and platforms like Google, Amazon or Facebook provide rich datasets and many features to help automate online advertising and metasearch ranking, with various “black-box” programmable options, such as setting a heuristic, or rule-based, bidding strategy. There are also various pre-trained machine learning and artificial intelligence methods available that are presented to the user as a “rule,” such as “revenue is higher and there are more clicks on Fridays,” “customers tend to pay more for international travel than for domestic travel” or “people tend to invest more on a trip for a honeymoon or an anniversary.” The problem with this type of tool, however, is that it suffers from low generalization. Generalization is the ability of a model to adapt as new data is piped in. Simply put, the black-box options often work well in testing, but they are not intelligent enough to figure out anything beyond the scope of their training.

Logistic regression

Logistic regression is a simple and powerful classification algorithm. If all we had to do was to decide if the company should place an ad or if a customer is likely to click the ad, logistic regression could have worked. But because our goal went well beyond those simple algorithms, we chose another method.

Dynamic linear programming

Linear programming is a simple, yet powerful mathematical optimization technique used to solve both straightforward and complex problems. Linear programming does require some additional work to understand the key decision variables, build a high-level understanding of the key metrics and drivers involved, define a custom objective function and find a suitable model for the (potentially non-linear) constraints or restrictions.

Linear programming does have a well-known problem: its linearity. A linear model cannot adapt fast enough to follow the emergent patterns and trends that change every day. We tackled the metasearch platform process as if it was a very complex dynamic physical system, using concepts and techniques borrowed from physics, thermodynamics, control theory, statistical analysis, and machine learning, creating a dynamic linear optimization solution. One of those techniques was the game theory concept of Nash equilibrium, made popular in the 2001 movie “A Beautiful Mind.” Another was Lyapunov stability, which helps find the stable and unstable states of a dynamic system.

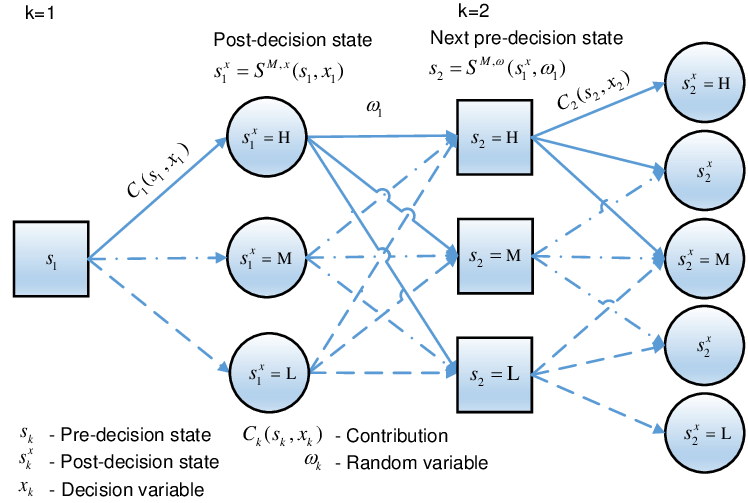

This is an example of dynamic linear programming as a multi-stage stochastic dynamical process, which we used to calculate a customer’s decision processes. Source: ResourceGate

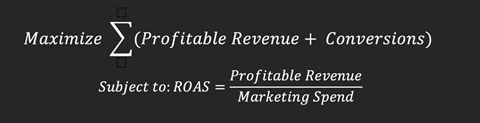

In using dynamic linear programming, we made two assumptions: that the bidding platform was operating in a stable environment and that the key business goal was to maximize the return in advertising spend in the short and the long term. These assumptions allowed us to use a variation of a classical optimization function to maximize the company’s profit based on hotel’s purchased through metasearch.

Classical optimization formula

Once we were able to optimize the return on investment with our assumption that the bidding platform was operating in a stable environment, we did establish a stopgap that made the model more conservative and even stopped the company from bidding for ad space if our assumption was no longer true and the metasearch platform suddenly became highly volatile, like in a sudden market-shift. In that case, our predictions for return on investment would no longer be accurate, which could quickly lose the company money.

Prediction

Once Blueprint chose the dynamic linear programming path, we then had to find a suitable way to model expected customer behavior. So many variables go into a customer choosing what hotel to book, and it was our mission to predict how those decisions would play into the bidding strategy and impact the client’s bottom line.

To predict return on advertising spend in metasearch platforms with multiple brands, bidding agents and hotels is highly challenging because there are millions of transactions and customers every day and thousands of events or reasons preferences can change. In many ways, predicting results of advertising spend on metasearch platforms can be compared to stock market prediction, with long periods of relative stability followed by intense periods of high volatility. You have a millisecond to pique the interest of a potential buyer – it’s crucial that they see the right ad at the right time.

To most accurately predict the client’s return on advertising spend, we realized we needed to predict five different things at once:

- Click-through rate

- Whether a hotel will display in a search

- Probability of conversion

- Cost-per-click

- Hotel profitability

We knew that any ML or AI model we developed must not only generalize well but also adapt and respond quickly to market changes and trends. In the end, we chose gradient boosting, but some other techniques we explored were linear regressors, random forest, neural networks, generalized linear models and Gaussian mixture models.

Linear regressors

For a long time, a popular saying in the data science world was, “All you need to know is linear regression.” And, for many problems that is a good starting point. A linear model is simple and with good approximation it can capture a very relevant part of a function that you’re trying to approximate. However, even though linear regression is quick to train and relatively easy to get in production, it would have been very difficult to maintain due to the large amounts and different types of data, and it would have been difficult to keep accuracy high over time.

Random forest

Random forest and gradient boosting are similar techniques because they are both ensemble learning techniques that use decision trees. But we could not find a good, distributed version of random forest that could run in the version of Spark our client had at that time, which would have made it a more difficult solution to deploy. Random forests are also known to be less resilient to covariate shift, can sometimes take more time to converge and results can be less accurate than those provided by gradient boosting.

Neural network

It is well documented that a single layer perceptron like a neural network is not able to capture the dynamic and non-stationary nature of online metasearch. So, we knew we needed to build at least a multi-layer perceptron to account for all the factors involved in the purchase decision. Modern deep learning models have millions of parameters that can be adjusted to produce significant results in any industry, and they have a place in metasearch bidding. But, for this project, building the model this way would take a lot of computing power and time to fine tune and train and we couldn’t be positive the results would be worth it.

Generalized Linear Model

Generalized linear models unify various statistical models such as linear regression and logistic regression through the specification of a model family and link function. They are widely used in modeling, inference and prediction, and they have the advantage of being available off-the-shelf. In general, GLMs are fairly easy to train, but they can have a tendency to over- or under-predict. We suspected that the blaring and chaotic nature of the online bidding process would not work well with this technique.

Gaussian mixture models

A Gaussian mixture model is a probabilistic model that assumes each data point is drawn from a separate Gaussian or normal distribution. We found that the Gaussian mixture model would not work well for this project due to the extreme values and sparse data common in metasearch platforms. Those factors can quickly bias a model, making the results unreliable.

Gradient boosting

Usability, scalability, performance, required training time, accuracy and precision all play a role in choosing the best algorithm or model for a task. At Blueprint, our goal is to build a solution that provides the best results, removes the most pain points, trains quickly, is easier to use and maintains long term results. Gradient boosting was that solution.

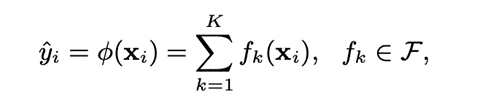

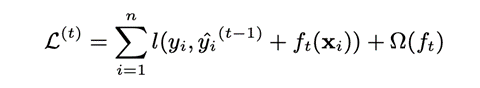

Gradient boosting formula

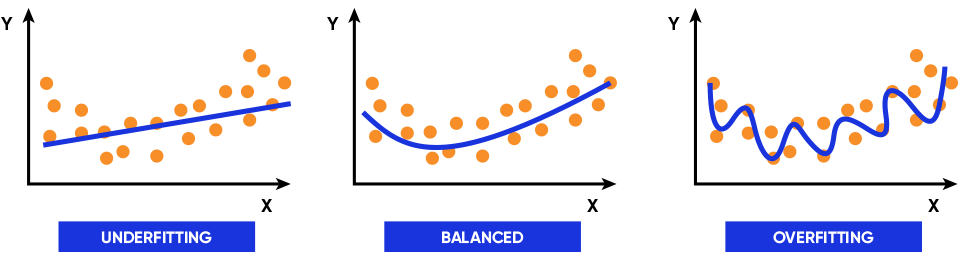

Gradient boosting is an extremely popular machine learning technique based on iterative functional gradient descent algorithms. Like the random forest technique, it builds an ensemble of “weak” learners, usually decision trees, which optimizes a cost function by iteratively choosing the best representation of the training data every time. The term “boosting” is used as an indication that the next iteration will expressly reduce the residual and directly plug in a new estimate that minimizes the gradient or rate of change of the cost function. This is good news in terms of speed of training and accuracy improvement, and not particularly good news in terms of potential overfit and potential lack of generalization.

Overfitting

To predict anything, you need data. To make five predictions that fed into a bigger prediction that would inform decisions for this client we needed a massive amount of historical data. Luckily, this client had years of it. But, with that quantity of data came a steep challenge: overfitting. Overfitting refers to a model learning the noise and fluctuations of training data so well that it negatively impacts the performance of the model on new data.

With this formula, we were able to correct for any potential overfitting. The omega (Ω) regularizes the model.

While gradient boosting can have a tendency toward overfitting, our data science team found it better to fine-tune gradient boosting regularization techniques available in open-source libraries than to use another option. For example, we added L1 and L2 regularization, early-stop regularization, controlled the weight and depth of each gradient boosting step, and used various hyperparameters to make each of the predictions more reliable. Additionally, our data science team trained our models daily rather than weekly or monthly to ensure our team captured short-term emergent trends and avoided overfitting.

Results

At the end of this 18-month engagement, we delivered five models and one algorithm that worked as a synchronized whole, receiving and returning continuous information streams to the company’s data ecosystem. Armed with optimization and prediction capabilities built with advanced data science techniques, the travel and hospitality company nearly eliminated its wasteful metasearch spend, maximizing its return on advertising spend. In the first three weeks of using these models, the company saw a 26% improvement in efficiency, resulting in considerable increases in company-wide revenue and profit and returning a quick ROI.

But we didn’t stop there. Because, as data scientists, we like to make sure we made all the right decisions, we built the other five prediction models outlined above as well seven others. We ran a series of A/B experiments and tests to make sure our original models performed the best for the client. While the gradient boosting solution did outperform all other models in all areas, we did move some of these other models into production to complement the original 5 models.

At Blueprint, the experience and expertise of our diverse team of data scientists is part of what makes us so special. We work fast and efficiently to find the best way to solve critical problems across many industries– and we always check our assumptions. We are problem solvers at heart. Let’s start a conversation about the business challenges you face today.