DATABRICKS CENTER OF EXCELLENCE

Hadoop-to-Databricks Migration QuickStart

Benefits

- Accelerate high-value analytics initiatives and gain experience with Databricks.

- Get up and running on Databricks on an accelerated timeline.

- Complete one (1) Hadoop workload migration and analyze the results with SQL Analytics and BI tools.

- Blueprint accelerators shorten the time to value and to actionable insights.

QuickStart Overview

- Introduce a data team to analytics on Databricks

- Prepare Databricks & data services in a non-prod environment

- Complete one (1) Hadoop workload migration

- Analyze the results with SQL Analytics and BI tools

Case Study

Major U.S. oil drilling company

Challenge

- Siloed data in disparate systems and a Hadoop environment that was not highly performant

- Drill bit sensors collected data every second, but the data reached data scientists only once every 24 hours, too slowly for BI to inform drilling decisions

- Delays in data availability slowed response times and drilling adjustments, making operations inefficient

Solution

- Stream siloed data (rig, sensor, oil sample, financial, HR, marketing) into an Azure Databricks data lake

- Process, normalize, and organize data into tables

- Remove the need for third-party legacy engineering tools to structure raw data

- Model data with Azure SQL & Azure Data Factory

- Share data with Power BI for real-time analytics, dashboards, and reports

Impact

- ~100 TB of data migrated to the data lake

- 80% shorter rig state data processing interval (from every 24 hours to every 4 hours)

- 95% less OFT data processing time (45 days of OFT data processed in 1 hour instead of 24 hours)

- Real-time drilling data available within 1 second!
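As a quick check, the Impact percentages can be recomputed from the before-and-after times given in parentheses, reading each figure as a reduction in processing time (this interpretation is an assumption; the source states only the rounded percentages and the times):

```latex
\begin{aligned}
\text{Rig state interval:}\quad & \frac{24\,\text{h} - 4\,\text{h}}{24\,\text{h}} = \frac{20}{24} \approx 83.3\%\ \text{reduction} \\
\text{OFT processing time:}\quad & \frac{24\,\text{h} - 1\,\text{h}}{24\,\text{h}} = \frac{23}{24} \approx 95.8\%\ \text{reduction}
\end{aligned}
```

Both results are consistent with the rounded-down 80% and 95% figures. Equivalently, rig state data is processed six times as often (24 / 4 = 6).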

QuickStart Timeline

Stage 1

Lakehouse 101

- Data acquisition

- Simple data transformations

- Organizing data

- Security

- BI & reporting

- Cost optimization & management

Stages 2-3

Up & running

- Implementation → Powered by Infrastructure as Code (IaC)

- Security Config → Blueprint security rapid config

- Lakehouse optimization → Blueprint Lakehouse Optimizer

Stages 4-8

Data pipelines

- Identify data sources

- Historical & current data

- Data transformations

- Data quality

- Scheduled data set creation

- Tables in the Lakehouse, ready!

Stages 8-10

BI & analysis, optimization, & roadmap

- Power BI or Tableau

- Databricks SQL (DBSQL) for ad hoc analysis

- Dashboards & reports

- Utilization management

- Roadmap the future

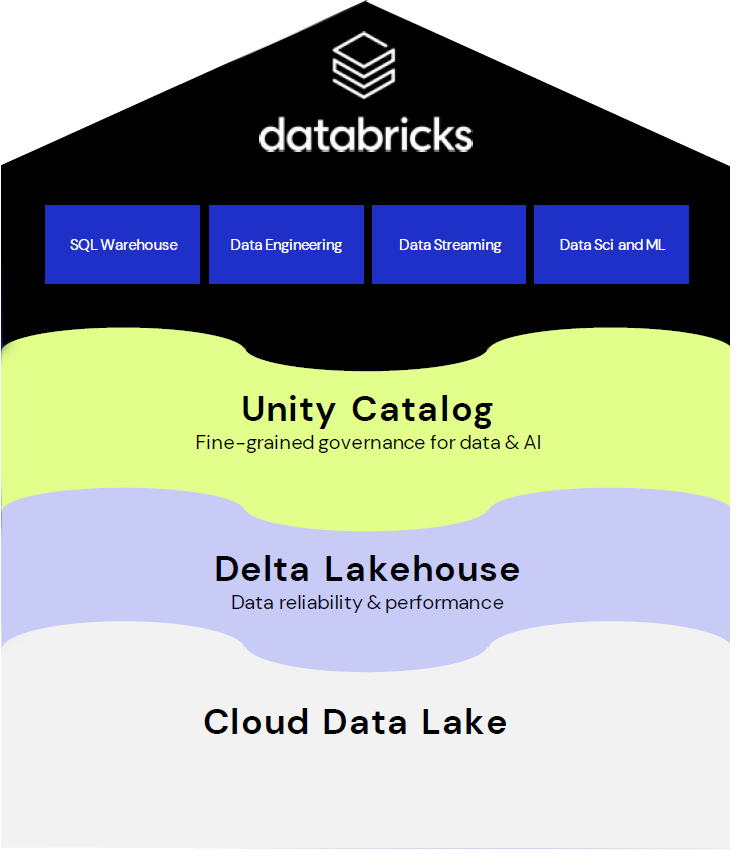

Deployment journey

AWS or Azure readiness

- Cloud account with resource-creation rights

- Terraform installed

QuickStart data sources

- Cloud-accessible data services identified

- Read-only account for those data sources

Scripted build

- Resource and admin groups

- Storage, Databricks workspace, clusters

- Unity Catalog / metastore / permissions

- Blueprint Lakehouse Optimizer

Sample data & notebooks

- Sample data deployed

- 3 notebooks deployed

Workflows active

- Jobs and workflows enabled and scheduled

Databricks is LIVE!

- SQL analysis workshop

- Power BI / Tableau

- Engineering & workflow demos

Lakehouse Optimized

- Monitor jobs

- Understand costs

- Identify orphaned workloads

Deliverables

Build a net-new use case and validate the cost versus performance, as well as the usability, of the Databricks platform.

- TCO and performance projection report

- Established Databricks Lakehouse environment

- Data ingestion pipeline

- Platform utilization monitoring app

- Working end-to-end use case or business process deployed to a non-production environment

- Results report and demonstration