The world of generative AI continues to evolve, becoming ever more accessible to people across every part of an organization. The latest milestone in this journey is the English SDK for Apache Spark, also known as PySpark-AI, which Databricks announced at the 2023 Data + AI Summit. This new SDK is not just another Python library; it’s a different way to approach data analysis. By using generative AI to translate English instructions into PySpark and SQL code, it aims to break down barriers and flatten the learning curve. Let’s dive in and explore what it has to offer.

The Vision

Databricks, the force behind this innovation, started with a simple vision: using English as a programming language. The idea was to use generative AI to compile English instructions into PySpark and SQL code, opening up programming to a broader audience. That vision led to PySpark-AI, an extension of Apache Spark that aims to make Spark accessible to even more people. You can read more about the announcement or check out the project’s GitHub repository.

The PySpark-AI project ships with a few impressive examples that use GPT-4, its default model. Intrigued, we decided to experiment with an older model, GPT-3.5 Turbo, even though the PySpark-AI team recommends against it because GPT-3.5 Turbo fell short of their quality expectations. You can read more about this in their discussion.

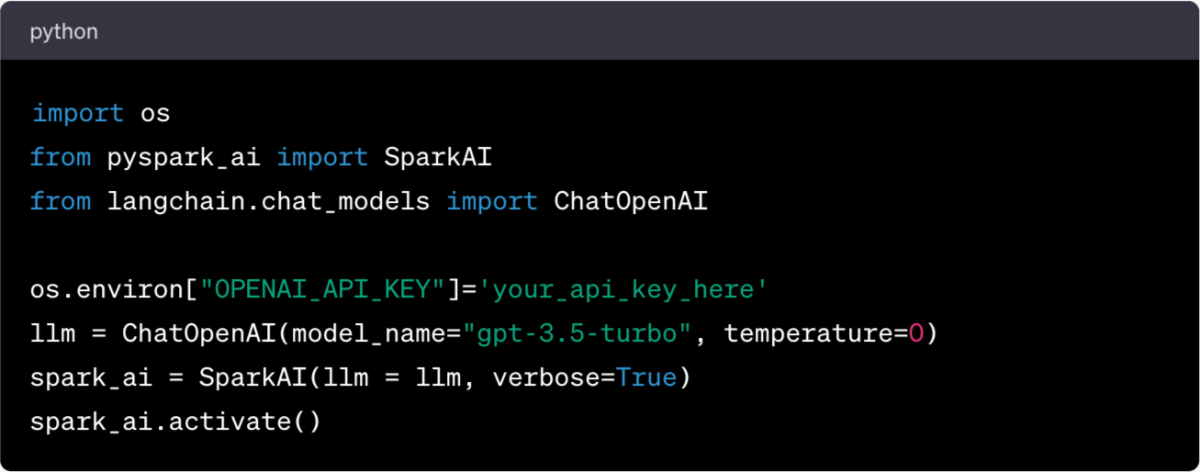

Before we dive in, note that you’ll need an OpenAI API key to run this notebook.

Getting Started

Installing the PySpark-AI package is straightforward, especially if you’re working in an environment like Databricks with an ML runtime. In other environments, you may need to install both PySpark and the PySpark-AI package.
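Before installing anything, it can be worth checking what the environment already provides. The sketch below uses only the standard library; the helper name `missing_packages` is our own, and it assumes `pyspark_ai` is the import name of the PySpark-AI package (published on PyPI as `pyspark-ai`).

```python
import importlib.util

# Import names to look for; "pyspark_ai" is assumed to be the module
# provided by the pyspark-ai package.
REQUIRED_MODULES = ("pyspark", "pyspark_ai")


def missing_packages(modules=REQUIRED_MODULES):
    """Return the subset of `modules` that cannot be found in this environment."""
    # find_spec returns None for a top-level module that is not installed,
    # without actually importing it.
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Not installed:", ", ".join(missing))
    else:
        print("PySpark and PySpark-AI are both available.")
```

If either module is reported missing, running `%pip install pyspark-ai` in a Databricks notebook, or `pip install pyspark pyspark-ai` in other environments, should install what is needed.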

Once installation is complete, we define the model and the API key. The SDK defaults to GPT-4, so you normally don’t need to specify a language model at all, but it does let you supply an alternative. That’s what we do here, using GPT-3.5 Turbo.
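A minimal sketch of that setup, assuming the API key is already in the `OPENAI_API_KEY` environment variable and that LangChain’s `ChatOpenAI` wrapper is installed. The `SparkAI(llm=..., verbose=True)` and `activate()` calls follow the pattern shown in the project’s README around the time of the announcement; the `make_spark_ai` helper and its early key check are our own illustrative additions.

```python
import os


def make_spark_ai(model_name: str = "gpt-3.5-turbo", temperature: float = 0.0):
    """Create a SparkAI instance backed by `model_name` instead of the GPT-4 default.

    make_spark_ai is a hypothetical helper, not part of PySpark-AI.
    """
    # LangChain's ChatOpenAI reads the key from OPENAI_API_KEY; fail early with a
    # clear message rather than on the first LLM call.
    if not os.environ.get("OPENAI_API_KEY"):
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")

    # Imported here so the helper can be defined before the packages are installed.
    # Newer LangChain releases moved ChatOpenAI to the separate langchain-openai package.
    from langchain.chat_models import ChatOpenAI
    from pyspark_ai import SparkAI

    # temperature=0 reduces randomness in the code the model generates.
    llm = ChatOpenAI(model_name=model_name, temperature=temperature)
    spark_ai = SparkAI(llm=llm, verbose=True)
    spark_ai.activate()  # attaches the `df.ai` helpers to Spark DataFrames
    return spark_ai
```

Calling `spark_ai = make_spark_ai()` once near the top of the notebook gives every later cell a configured instance. On Databricks, filling `OPENAI_API_KEY` from a secret scope is safer than pasting the key into a cell.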

Creating a DataFrame from a Webpage

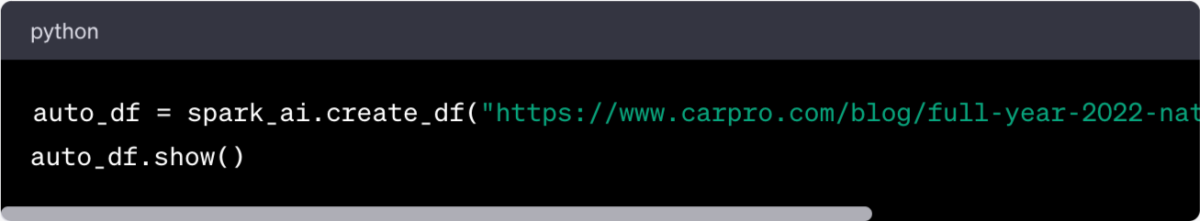

In our exploration, we will scrape a website for automobile sales data from 2022. The following code creates a DataFrame from the data found on the webpage:
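A minimal sketch, assuming `spark_ai` is an activated `SparkAI` instance and that `SparkAI.create_df` accepts a page URL, as in the project’s README examples. The URL below is a hypothetical placeholder, and the `dataframe_from_page` wrapper with its http(s) check is our own addition.

```python
from urllib.parse import urlparse

# Hypothetical placeholder: replace with the page that lists the 2022 sales figures.
SALES_PAGE_URL = "https://example.com/2022-auto-sales-by-brand"


def dataframe_from_page(spark_ai, url: str = SALES_PAGE_URL):
    """Ask PySpark-AI to turn the tabular data on a webpage into a Spark DataFrame.

    dataframe_from_page is a hypothetical wrapper around SparkAI.create_df.
    """
    # Reject anything that is not an explicit web URL before spending tokens.
    if urlparse(url).scheme not in ("http", "https"):
        raise ValueError(f"Expected an http(s) URL, got {url!r}")
    # The model reads the page content and produces the DataFrame.
    return spark_ai.create_df(url)
```

Running `auto_df = dataframe_from_page(spark_ai)` followed by `auto_df.show(n=5)` is a quick way to confirm the model extracted sensible columns before going further.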

This simple block of code does all the heavy lifting and gives us a DataFrame to analyze and visualize.

Making Sense of the Data: Explaining and Visualizing Data with PySpark-AI

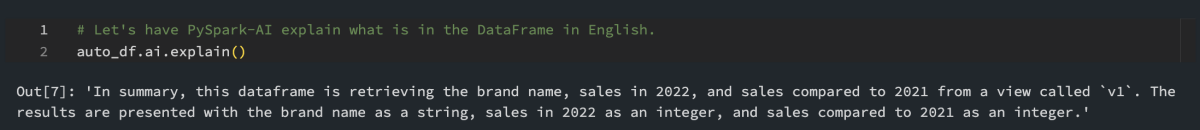

With the DataFrame in place, the fun begins. Because the English SDK is built on generative AI, it can explain a DataFrame in plain English. This makes the data easier to understand and helps non-technical users draw insights from it.

Here's how you can get PySpark-AI to explain your DataFrame:
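A minimal sketch, assuming the DataFrame gained its `ai` accessor from `SparkAI.activate()` and that `df.ai.explain()` is the explanation call, as in the project’s README. The `describe_dataframe` wrapper is hypothetical; it only adds a clearer error when the accessor is missing.

```python
def describe_dataframe(df):
    """Return PySpark-AI's plain-English explanation of `df`.

    describe_dataframe is a hypothetical wrapper, not part of PySpark-AI.
    """
    # The `ai` accessor only exists after SparkAI.activate() has been called;
    # fail with a helpful message otherwise.
    if not hasattr(df, "ai"):
        raise AttributeError("DataFrame has no `ai` accessor; call spark_ai.activate() first.")
    return df.ai.explain()
```

With the scraped data, `print(describe_dataframe(auto_df))` prints the model’s summary.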

But the English SDK really shines when we ask it to plot our results. The SDK sends the language model a prompt asking for Python code that visualizes the DataFrame’s results.

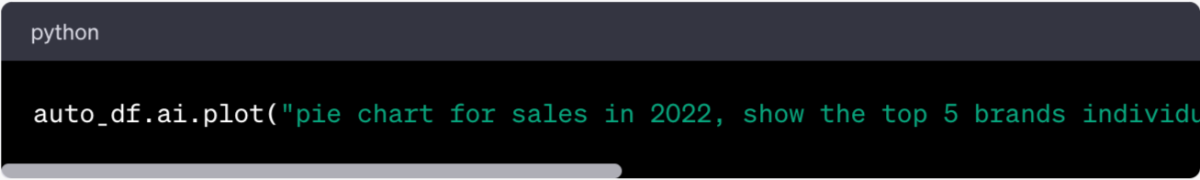

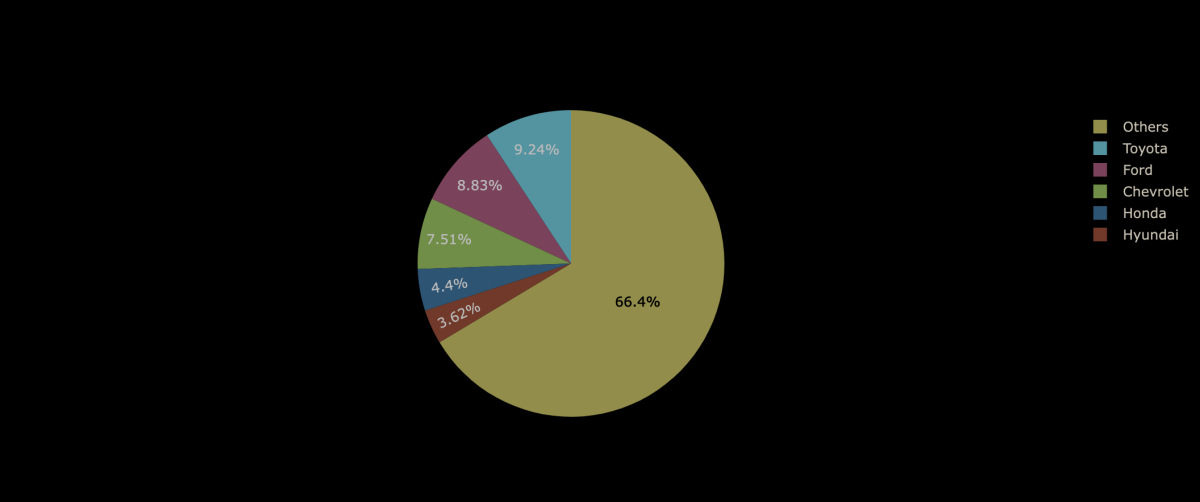

For example, we can ask for a pie chart that shows sales in 2022 for the top five brands and the sum of all the other brands:
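A sketch of that request, assuming `df.ai.plot()` accepts an optional English instruction as in the project’s README. The `plot_with_ai` helper and the exact wording of `PIE_CHART_REQUEST` are our own, not taken from the original notebook.

```python
# Instruction in the spirit of the example above; the wording is ours.
PIE_CHART_REQUEST = (
    "pie chart of 2022 sales showing the top 5 brands "
    "and the sum of all other brands as a single slice"
)


def plot_with_ai(df, instruction=None):
    """Have the LLM write and run plotting code for `df`.

    plot_with_ai is a hypothetical wrapper around df.ai.plot.
    """
    # With no instruction, PySpark-AI chooses a chart on its own; otherwise the
    # English instruction is passed through to the model.
    if instruction is None:
        return df.ai.plot()
    return df.ai.plot(instruction)
```

`plot_with_ai(auto_df, PIE_CHART_REQUEST)` asks for the pie chart, while `plot_with_ai(auto_df)` lets the model pick a chart type. Because the model writes the plotting code, the resulting chart may differ between models or prompt wordings.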

With this command, PySpark-AI generates the Python code for the pie chart and then executes it, giving us a clear, intuitive view of the data.
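Whatever code the model writes, the chart depends on one aggregation: keep the five best-selling brands and fold the rest into a single slice. The plain-Python sketch below illustrates that step so it can be checked without a Spark cluster; it is not the code PySpark-AI generates, `top_n_with_others` is a hypothetical name, and the brand figures are made-up placeholders.

```python
from typing import Dict, List, Tuple


def top_n_with_others(
    sales: Dict[str, int], n: int = 5, other_label: str = "Others"
) -> List[Tuple[str, int]]:
    """Return the n largest (brand, sales) pairs plus one pair summing the rest."""
    # Sort brands by sales, largest first.
    ranked = sorted(sales.items(), key=lambda item: item[1], reverse=True)
    top, rest = ranked[:n], ranked[n:]
    # Only add the catch-all slice when there is something left to combine.
    if rest:
        top.append((other_label, sum(value for _, value in rest)))
    return top


# Made-up placeholder figures, not real 2022 sales data.
example = {"Brand A": 120, "Brand B": 90, "Brand C": 75, "Brand D": 60,
           "Brand E": 40, "Brand F": 25, "Brand G": 10}
print(top_n_with_others(example))
# [('Brand A', 120), ('Brand B', 90), ('Brand C', 75), ('Brand D', 60), ('Brand E', 40), ('Others', 35)]
```

Comparing the generated chart against a hand-computed aggregation like this is a cheap sanity check, particularly when using a model the project team does not recommend.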

Conclusion

The English SDK for Apache Spark introduces a different approach to data analysis. By translating English instructions into PySpark and SQL code, it lowers the barrier to programming and makes data analysis accessible to a broader audience. Whether you’re a seasoned business intelligence engineer, an inquisitive technologist, or a beginner, the English SDK for Apache Spark makes it simpler to extract insights from data.