The problem

A global leader in the entertainment and restaurant industry had one key data goal: know the customer well enough to build the best possible relationship and optimize their experience at every level. The company recognized the value of its own data but could not realize that value because of siloed, outdated infrastructure and the sheer volume of data involved. The company wanted to create customer profiles based on three main data sources:

- Point-of-sale data from a 15 TB SQL server that was never tuned for big-data workloads. Each transaction created a new row in the database, resulting in 375 million rows of data that were effectively unqueryable.

- Transaction data from mobile devices, stored in a Postgres database on AWS and in Google Analytics.

- Streaming customer experience data from in-store Wi-Fi and the corporate website. This stream holds important demographic data and organizes it into individual customer profiles.

“They were operating as if it were the early ’90s as far as their IT department was concerned. Everything was a SQL database. Everything was siloed. Every piece of data was in a different database,” a Blueprint business development director said. “Every time they wanted to integrate data from these different silos, it was a big project.”

The Blueprint Way

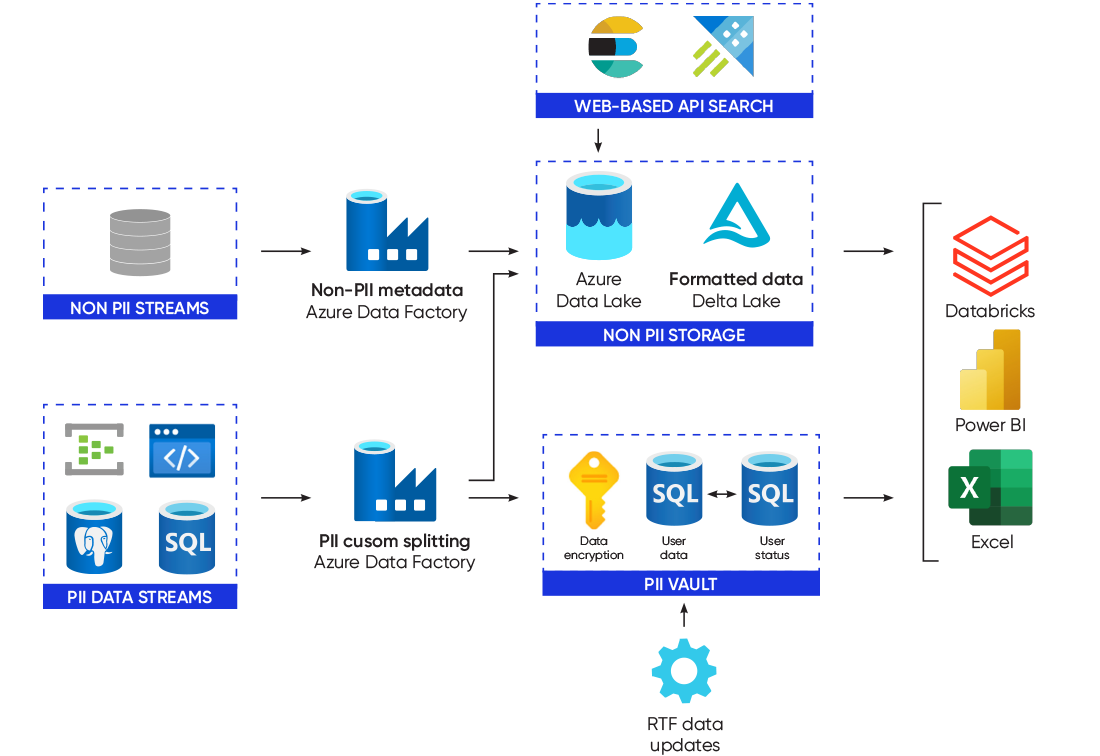

Following a project definition workshop with the client, Blueprint outlined a 30/60/90-day roadmap focused on rapidly building the foundations of a modern data estate and gathering more than 20 TB of data from the disparate sources into a central location in the cloud.

“We created and used the Azure Data Factory metadata-driven pattern that we have spearheaded at Blueprint and steered all that data over to a data lake,” Blueprint Director of Solutions Development Eric Vogelpohl said.
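The idea behind a metadata-driven pattern is that one generic pipeline reads a control table describing each source, rather than a separate pipeline being hand-built per source. The sketch below illustrates that idea in plain Python; it is not Blueprint's implementation and does not call Azure Data Factory. The control-table fields, the `build_copy_task` helper, the table names and the `raw/` lake path are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SourceEntry:
    """One row of a hypothetical control table describing a source to copy."""
    source_system: str                      # illustrative label for the source
    object_name: str                        # table or feed name in the source
    load_type: str                          # "full" or "incremental"
    watermark_column: Optional[str] = None  # required for incremental loads


def build_copy_task(entry: SourceEntry,
                    last_watermark: Optional[str] = None,
                    lake_root: str = "raw") -> dict:
    """Turn one control-table row into a generic copy-task description."""
    query = f"SELECT * FROM {entry.object_name}"
    if entry.load_type == "incremental":
        if entry.watermark_column is None:
            raise ValueError("incremental loads need a watermark column")
        # Only rows changed since the last run; the first run copies everything.
        if last_watermark is not None:
            query += f" WHERE {entry.watermark_column} > '{last_watermark}'"
    elif entry.load_type != "full":
        raise ValueError(f"unknown load type: {entry.load_type}")
    sink_path = f"{lake_root}/{entry.source_system}/{entry.object_name}"
    return {"query": query, "sink_path": sink_path}


# Assumed control-table contents, for illustration only.
CONTROL_TABLE = [
    SourceEntry("pos_sqlserver", "dbo.transactions", "incremental", "modified_at"),
    SourceEntry("mobile_postgres", "public.orders", "full"),
]

# One generic loop handles every source; onboarding a source means adding a row.
tasks = [build_copy_task(e, last_watermark="2020-01-01") for e in CONTROL_TABLE]
```

The design choice here is that adding a new source becomes a data change (one more row in the control table) instead of a new integration project.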

Blueprint migrated the point-of-sale, mobile transaction and customer experience data into an Azure Data Lake and then implemented Databricks Delta Lake to form a “lakehouse.” Azure Event Hubs were configured to ingest the streaming customer experience data into the data lake. All historical and real-time streaming data is now organized in the same location, allowing for querying and analysis for the first time. Queries that once took 8 to 9 minutes to run can now be completed in less than 10 seconds, and access to data has been democratized across the organization.

With all their data in a single, manageable and stable location, the company is now able to consider business decisions with a complete picture of their customers. This data is currently being used to build customer behavior metrics and profiles for use in marketing, predictive analysis and layout planning. In addition, by adopting a Databricks lakehouse pattern, the company can comply with the data erasure requirements of privacy laws like GDPR and CCPA.
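As a rough illustration of how erasure can work on Delta Lake: a `DELETE` removes the customer's rows from the current table version, and a later `VACUUM` removes the old data files that still contain them once the retention window has passed. The sketch below only builds those two SQL statements as strings. The table name, key column and customer ID format are assumptions, and the `erasure_statements` helper is hypothetical rather than part of any library.

```python
import re

# Conservative patterns so untrusted input is never interpolated into SQL.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_.]*$")
_CUSTOMER_ID = re.compile(r"^[A-Za-z0-9_-]+$")


def erasure_statements(table: str,
                       customer_id: str,
                       key_column: str = "customer_id",
                       retain_hours: int = 168) -> list:
    """Build the Delta Lake SQL for erasing one customer's rows.

    Assumes a single key column identifies the customer in the table.
    """
    if not _IDENTIFIER.match(table) or not _IDENTIFIER.match(key_column):
        raise ValueError("unexpected table or column name")
    if not _CUSTOMER_ID.match(customer_id):
        raise ValueError("unexpected customer id format")
    if retain_hours < 0:
        raise ValueError("retain_hours must be non-negative")
    return [
        # Step 1: remove the rows from the current version of the table.
        f"DELETE FROM {table} WHERE {key_column} = '{customer_id}'",
        # Step 2: remove stale data files older than the retention window.
        f"VACUUM {table} RETAIN {retain_hours} HOURS",
    ]


# Hypothetical table and customer ID, for illustration only.
statements = erasure_statements("profiles.customers", "c-1001")
```

On a Databricks cluster, each statement could then be passed to `spark.sql(...)`. Until the `VACUUM` runs, the deleted rows remain reachable through older table versions, so the retention window matters for meeting erasure deadlines.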