The problem

Faced with astronomical volumes of video and limited human hours to spend on manual review, this agency had to massively scale its video analysts’ workflows. The agency needed to better incorporate video analytics technology into its workflows for two main reasons:

- To process more footage and quickly identify patterns and irregularities in videos from all around the world.

- To reduce the immense amount of time needed to manually match patterns or irregularities between videos.

Video analytics capabilities have greatly expanded in recent years, particularly in traffic and transportation, and the agency was already working with Microsoft to better understand what was possible using advanced video analytics on Azure. But to incorporate a state-of-the-art video analytics process into its workflows, the agency required a team of data scientists dedicated to the project.

The Blueprint Way

Working with the U.S. government brings a unique set of complications because agencies cannot disclose many details about how they will use a solution. Blueprint thrives on the unknown, and its agile, feedback-driven approach let it deliver value to the agency quickly while handling ambiguity in a sensitive data environment.

To develop this video analytics solution, Blueprint’s data science team focused on three areas: computer vision, object tracking and anomaly detection. Each of these presented its own unique set of challenges.

Computer vision

The Blueprint team recognized that choosing the right model architecture was critical for the solution, and there are many to choose from. After testing dozens of architectures and models, the data science team chose EfficientDet-D0, D3 and D7 because they balance speed and accuracy.

“We can have a really accurate model that will take a few minutes to process a few seconds of video,” said Blueprint Data Science Solution Architect Cori Hendon. “But if processing takes longer than watching the video – you’re not helping.”
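The trade-off in the quote can be expressed as a real-time factor: processing time divided by the video's playback time, where anything above 1.0 means analysis is slower than simply watching. The Python sketch below picks the most accurate detector that stays within that budget. It is an illustration under stated assumptions, not Blueprint's selection process: `DetectorProfile` and `pick_detector` are hypothetical names, and the accuracy scores and per-frame timings are made-up placeholders rather than measured EfficientDet figures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DetectorProfile:
    name: str
    accuracy: float           # hypothetical accuracy score, higher is better
    seconds_per_frame: float  # hypothetical processing time per frame on one worker


def real_time_factor(profile: DetectorProfile, fps: float) -> float:
    """Seconds of processing per second of video on a single worker."""
    return profile.seconds_per_frame * fps


def pick_detector(profiles, fps: float, max_rtf: float = 1.0,
                  workers: int = 1) -> Optional[DetectorProfile]:
    """Return the most accurate profile whose real-time factor, divided across
    `workers` parallel workers, stays within `max_rtf`; None if nothing fits."""
    feasible = [p for p in profiles
                if real_time_factor(p, fps) / workers <= max_rtf]
    return max(feasible, key=lambda p: p.accuracy, default=None)


# Placeholder numbers for illustration only; not benchmark results.
PROFILES = [
    DetectorProfile("D0", accuracy=0.30, seconds_per_frame=0.02),
    DetectorProfile("D3", accuracy=0.45, seconds_per_frame=0.06),
    DetectorProfile("D7", accuracy=0.55, seconds_per_frame=0.40),
]
```

With these placeholder numbers and 30 fps video, only D0 keeps pace on a single worker, while dividing the work across four workers makes D3 feasible. Measured timings on the target hardware would replace the placeholders.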

To process large quantities of video data, a solution must first recognize the objects in that data: is an object a car or a briefcase? Focusing on cars and using clustering algorithms to identify them, Blueprint built out NASH’s computer vision capabilities in two months, providing immediate value to the agency.

It soon became apparent, though, that Blueprint would not be able to access the large amount of data needed to train its models because of the sensitive nature of the agency’s work. Pivoting quickly, Blueprint got creative and sourced the data needed to train its early models from the video game Grand Theft Auto V.

To shorten video processing time, Blueprint split each video into equal segments and processed them separately, in parallel, through the EfficientDet neural network, displaying the results immediately to improve the user experience and reduce time-to-insight. The neural network runs on a GPU-enabled cluster of VMs in the Azure cloud, which makes scaling easy and gives full control over the balance between performance and cost.
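The segment-and-parallelize step can be sketched as follows. This is a minimal single-machine illustration assuming the video is addressed by frame index: a thread pool stands in for the GPU-enabled VM cluster, and `detect_segment` is a hypothetical placeholder for EfficientDet inference. Each segment's results are reported as soon as it finishes, then everything is reassembled in frame order.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Optional


def split_into_segments(total_frames: int, num_segments: int) -> list[tuple[int, int]]:
    """Split frames [0, total_frames) into num_segments contiguous [start, end)
    ranges whose lengths differ by at most one frame."""
    if num_segments < 1:
        raise ValueError("num_segments must be at least 1")
    base, extra = divmod(total_frames, num_segments)
    segments, start = [], 0
    for i in range(num_segments):
        end = start + base + (1 if i < extra else 0)
        segments.append((start, end))
        start = end
    return segments


def detect_segment(segment: tuple[int, int]) -> tuple[tuple[int, int], list[int]]:
    """Hypothetical stand-in for running the detector on one segment.
    Here it simply returns the frame indices it 'processed'."""
    start, end = segment
    return segment, list(range(start, end))


def process_video(total_frames: int, num_segments: int, max_workers: int = 4,
                  on_segment_done: Optional[Callable] = None) -> list[int]:
    """Process all segments in parallel, report each one as it completes,
    and return the combined results in frame order."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(detect_segment, seg)
                   for seg in split_into_segments(total_frames, num_segments)]
        for future in as_completed(futures):
            segment, detections = future.result()
            results[segment] = detections
            if on_segment_done is not None:
                # e.g. push partial results to the UI right away
                on_segment_done(segment, detections)
    return [d for seg in sorted(results) for d in results[seg]]
```

For example, `split_into_segments(10, 3)` yields `[(0, 4), (4, 7), (7, 10)]`; the callback is where a UI could render each segment's detections without waiting for the whole video.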

Object tracking

The client wanted to extract behavioral insights from video, but training models to detect a behavior requires knowing which behaviors matter to the end user. Because both the data and the use cases were sensitive or classified, Blueprint began with object tracking, moving from detecting objects in a video (cars) to tracking each car’s path.

“Object tracking is a difficult problem from a computer’s perspective,” Cori said. “If a person walks behind a tree (occlusion), is the person that reappears on the other side the same person or a new person?”

Once the Blueprint team had chosen a model, they tested different algorithms, and different features to include in them, to find what worked best for the agency. NASH’s tracker combines several techniques to identify and monitor object activity: Kalman filtering, joint detection and embedding (JDE) tracking and a sliding window search.

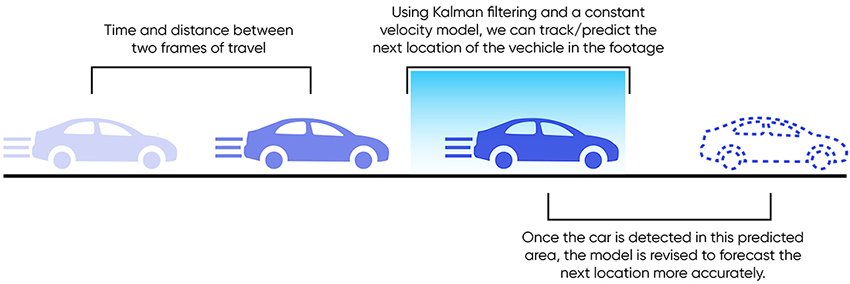

Kalman filtering is used to predict an object’s future position from observations of its current position. It assumes a constant-velocity model that tolerates small fluctuations, allowing slight variation in a car’s velocity so the model more closely resembles how cars actually move. This technique is based on the following formula:

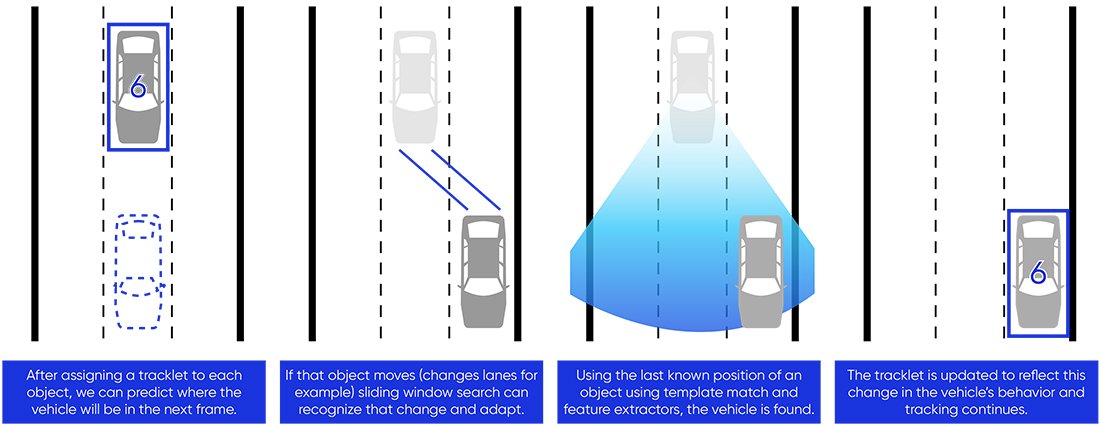
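A constant-velocity Kalman filter of the kind described can be sketched in Python with NumPy. This is a textbook formulation assuming pixel coordinates, one time step per frame and illustrative noise variances; it is not necessarily the exact formulation shown in the figure. The white-noise acceleration term (`process_var`) is what lets a car's velocity drift slightly from frame to frame instead of staying rigidly constant. `ConstantVelocityKalman` is a hypothetical name.

```python
import numpy as np


class ConstantVelocityKalman:
    """2-D constant-velocity Kalman filter.
    State: [x, y, vx, vy]; measurement: [x, y] (e.g. a bounding-box center)."""

    def __init__(self, x, y, dt=1.0, process_var=1.0, meas_var=4.0):
        self.state = np.array([x, y, 0.0, 0.0], dtype=float)
        # Position roughly known from the first detection; velocity unknown.
        self.P = np.diag([meas_var, meas_var, 100.0, 100.0])
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        # White-noise acceleration model: lets velocity fluctuate slightly.
        q = np.array([[dt**4 / 4, dt**3 / 2],
                      [dt**3 / 2, dt**2]]) * process_var
        self.Q = np.zeros((4, 4))
        self.Q[np.ix_([0, 2], [0, 2])] = q  # x and vx
        self.Q[np.ix_([1, 3], [1, 3])] = q  # y and vy
        self.R = np.eye(2) * meas_var

    def predict(self):
        """Project the state one frame ahead; returns the predicted (x, y)."""
        self.state = self.F @ self.state
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.state[:2].copy()

    def update(self, zx, zy):
        """Correct the prediction with an observed position; returns (x, y)."""
        z = np.array([zx, zy], dtype=float)
        innovation = z - self.H @ self.state
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)  # Kalman gain
        self.state = self.state + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.state[:2].copy()
```

Each frame, `predict()` gives the expected position used for matching, and `update()` is called only when a detection is matched to the track; during a miss, repeated `predict()` calls carry the car forward at its estimated velocity.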

Joint detection and embedding, in which a single network produces both the detections and an appearance embedding for each one, is used together with Kalman filtering to assign a tracklet to each bounding box, or object. A tracklet is a data structure that holds information about a tracked object, such as its unique ID. Tracklets make it possible to identify lost and removed tracks and to keep track of which tracks are active or inactive.

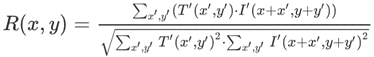
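The tracklet bookkeeping can be sketched as below. It assumes the JDE network has already produced a bounding box and an appearance embedding for each detection. `Tracklet`, `associate` and the thresholds are hypothetical; detections are matched greedily by embedding cosine distance, a simplification of the optimal (Hungarian) assignment a full JDE tracker typically uses, and a simple center-distance gate stands in for gating on the Kalman-predicted position.

```python
import itertools
from dataclasses import dataclass, field
from enum import Enum

import numpy as np


class TrackState(Enum):
    ACTIVE = "active"
    LOST = "lost"
    REMOVED = "removed"


_next_id = itertools.count(1)


@dataclass
class Tracklet:
    box: np.ndarray        # [x1, y1, x2, y2] of the last matched detection
    embedding: np.ndarray  # appearance embedding of the last matched detection
    track_id: int = field(default_factory=lambda: next(_next_id))
    state: TrackState = TrackState.ACTIVE
    frames_since_seen: int = 0


def center(box):
    return np.array([(box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0])


def cosine_distance(a, b):
    return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def associate(tracklets, boxes, embeddings, max_dist=0.4,
              max_center_dist=50.0, max_lost=30):
    """Match one frame's detections to tracklets, update their states and
    start new tracklets for unmatched detections (hypothetical thresholds)."""
    # Candidate pairs, gated by distance between box centers. A full tracker
    # would gate on the Kalman-predicted box instead of the last seen box.
    candidates = sorted(
        (cosine_distance(t.embedding, emb), ti, di)
        for ti, t in enumerate(tracklets) if t.state != TrackState.REMOVED
        for di, (box, emb) in enumerate(zip(boxes, embeddings))
        if np.linalg.norm(center(t.box) - center(box)) <= max_center_dist
    )
    matched_t, matched_d = set(), set()
    for dist, ti, di in candidates:  # closest pairs first
        if dist > max_dist:
            break
        if ti in matched_t or di in matched_d:
            continue
        t = tracklets[ti]
        t.box, t.embedding = boxes[di], embeddings[di]
        t.state, t.frames_since_seen = TrackState.ACTIVE, 0
        matched_t.add(ti)
        matched_d.add(di)
    # Unmatched tracklets become lost, then removed after max_lost frames.
    for ti, t in enumerate(tracklets):
        if ti not in matched_t and t.state != TrackState.REMOVED:
            t.frames_since_seen += 1
            t.state = (TrackState.REMOVED if t.frames_since_seen > max_lost
                       else TrackState.LOST)
    tracklets.extend(Tracklet(boxes[di], embeddings[di])
                     for di in range(len(boxes)) if di not in matched_d)
    return tracklets
```

A tracklet that reappears within `max_lost` frames keeps its original ID, which is how a car that was briefly occluded can be recognized as the same car.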

Sliding window search is used when an object is lost (i.e., the object detector fails to recognize a car in one or more consecutive frames). A sliding window search looks for that object around its last known position. To confirm the identity of the lost object, we use both template matching and custom feature extractors. Template matching searches for a given image inside a target image. In our case, the input image is the last known detection of the lost car, and the target image is an area inside the frame. Importantly, template matching is not applied to the entire frame but only to a specified region centered on the last detected position of the lost object.
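Template matching restricted to a region around the last known position can be sketched with NumPy using zero-mean normalized cross-correlation, one common matching score. This is an assumption: the source does not say which score NASH uses, the custom feature extractors are not shown, and `search_region`, `match_template_ncc` and `margin` are hypothetical names. OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` computes the same score much faster; the explicit loops here only make the computation visible.

```python
import numpy as np


def search_region(frame_shape, center, template_shape, margin):
    """(top, left, bottom, right) of a window centered on the last known
    position (row, col), padded by `margin` pixels and clipped to the frame."""
    h, w = frame_shape[:2]
    th, tw = template_shape[:2]
    cy, cx = center
    top = max(0, cy - th // 2 - margin)
    left = max(0, cx - tw // 2 - margin)
    bottom = min(h, cy + (th - th // 2) + margin)
    right = min(w, cx + (tw - tw // 2) + margin)
    return top, left, bottom, right


def match_template_ncc(frame, template, center, margin=20):
    """Slide a grayscale `template` over the search region only.
    Returns (best_top_left_in_frame, best_score); score lies in [-1, 1]."""
    top, left, bottom, right = search_region(frame.shape, center, template.shape, margin)
    region = frame[top:bottom, left:right].astype(float)
    tmpl = template.astype(float)
    th, tw = tmpl.shape
    t = tmpl - tmpl.mean()
    t_norm = np.sqrt((t ** 2).sum())
    best_score, best_pos = -np.inf, None
    for y in range(region.shape[0] - th + 1):
        for x in range(region.shape[1] - tw + 1):
            patch = region[y:y + th, x:x + tw]
            p = patch - patch.mean()
            denom = t_norm * np.sqrt((p ** 2).sum())
            score = (p * t).sum() / denom if denom > 0 else 0.0
            if score > best_score:
                best_score, best_pos = score, (top + y, left + x)
    return best_pos, best_score
```

Limiting the search to a window around the last known position keeps the cost proportional to the window size rather than the full frame, and makes it less likely that a similar-looking car elsewhere in the frame is mistaken for the lost one.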

This technique uses the following formula:

Blueprint presented its object tracking results to the agency early, which earned enough trust and confidence for the agency to share feedback about additional behaviors it was interested in. That feedback let Blueprint iterate and improve continuously by adding more capabilities.

Anomaly detection

The ability to track objects generates data about where objects move and makes it possible to extract more behavioral data. Custom Ramer–Douglas–Peucker (RDP) decimation algorithms and time-series clustering enable the detection of common behaviors, which in turn allows the extraction of behavioral anomalies, such as a car pulled over to the side of the road, more cars than usual frequenting a specific location, or a person deviating from a route they regularly take. Blueprint also defined a similarity function and coded it into the algorithms, which lets the agency quickly process all its footage to find similar anomalies without someone having to review all of it manually.
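The trajectory pipeline can be sketched as: simplify each track with Ramer-Douglas-Peucker, compare it with known common routes using a similarity function, and flag tracks that are far from all of them. The source does not specify NASH's custom RDP variant or its similarity function, so this sketch assumes the classic RDP algorithm and dynamic time warping (DTW) as the similarity measure. `common_routes` stands in for the output of the time-series clustering step, and `flag_anomalies`, `epsilon` and `threshold` are hypothetical names and illustrative values.

```python
import math


def _point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * px - dx * py + bx * ay - by * ax) / math.hypot(dx, dy)


def rdp(points, epsilon):
    """Classic Ramer-Douglas-Peucker: keep only points that deviate more
    than epsilon from the simplified polyline."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    idx, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = _point_line_distance(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= epsilon:
        return [a, b]
    left = rdp(points[: idx + 1], epsilon)
    right = rdp(points[idx:], epsilon)
    return left[:-1] + right  # drop the duplicated split point


def dtw_distance(t1, t2):
    """Dynamic time warping distance between two (x, y) trajectories."""
    n, m = len(t1), len(t2)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(t1[i - 1], t2[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def flag_anomalies(trajectories, common_routes, epsilon=1.0, threshold=50.0):
    """Indices of trajectories whose DTW distance to every common route
    exceeds `threshold` after RDP simplification."""
    routes = [rdp(r, epsilon) for r in common_routes]
    flagged = []
    for i, traj in enumerate(trajectories):
        simple = rdp(traj, epsilon)
        if min(dtw_distance(simple, r) for r in routes) > threshold:
            flagged.append(i)
    return flagged
```

Simplifying first keeps the quadratic DTW comparison cheap, because a long, mostly straight track collapses to a handful of vertices; the same similarity function can then rank all footage against one anomaly of interest.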

Results

Through computer vision, object tracking and anomaly detection, the agency’s video analysts were able to scale their workflows effectively and substantially increase their throughput. By starting their review with the anomalies NASH identified, rather than manually combing through hundreds of hours of footage, analysts greatly increased both their productivity and their ability to identify items of interest.

The result of this two-month engagement was NASH, a product built on Microsoft Azure and available on the Azure Marketplace. NASH uses advanced analytics and machine learning to help organizations use their video assets to detect and track objects in motion more accurately and to extract pattern-based insights.