Prioritize. Right-size. Maximize value.

The pandemic accelerated digital transformation across industries. As businesses raced to migrate operations to cloud infrastructure and apps to support the rapid shift toward remote work, many sacrificed optimization for speed.

Today’s economic headwinds, including a looming recession, persistent inflation, layoffs, and an ongoing supply chain crisis, have made cloud cost optimization a pressing concern, and platforms like Databricks are emerging as particularly effective ways to address it.

Databricks is one of the most powerful platforms available to companies that use data to run faster, smarter, and leaner. The Databricks Lakehouse, built on Delta Lake, powers analytics, applications, and AI/ML at massive scale, delivering the best price-to-performance ratio of any data platform on the market. Yet every technology solution comes with challenges and tradeoffs in cost and performance.

As an engineer, you’re making cloud infrastructure and coding decisions without full visibility into the costs involved. You likely never see the invoices, yet leadership and finance teams are scrutinizing cloud spend and pushing for accountability. That makes it vital to control costs and track cloud spend as an operational metric.

What are your goals? Saving costs? Improving margins? Growing revenue and customer base? Pivoting your business model? Improving the productivity of your pipelines? The digital imperative to leverage technology to do more with less is more critical than ever.

To address this effectively, you need a comprehensive solution that combines a robust Databricks monitoring tool, such as the Lakehouse Optimizer powered by Blueprint, with expert-level knowledge of Spark. This combination enables you to optimize performance and contain cloud costs while also accelerating your business and data intelligence use cases.

Cloud Cost Optimization Starts with Monitoring

Cloud cost management is the tracking, reporting, and allocation of cloud spending. Cloud cost optimization goes a step further: it means taking the actions needed to extract maximum value from your investments, such as eliminating mismanaged resources, reducing waste, securing reserved capacity discounts, and precisely scaling your environment and computing services. It’s a comprehensive approach to minimizing cloud spend while maximizing return on investment.

When you’re responsible for managing cloud spend as an operational metric, making informed decisions depends on access to detailed data and actionable insights. To stay ahead of issues, your cloud monitoring strategy and tools must detect and alert you to failures, anomalies, and waste in real time so you can take swift, effective action. With this granular visibility, you can proactively optimize your cloud environment for both performance and cost-effectiveness.
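To make spend anomaly detection more concrete, here is a minimal sketch in Python. It assumes you already have daily spend totals exported from your billing or monitoring data. The function name `flag_cost_anomalies` and its window and threshold defaults are hypothetical choices for illustration, not part of the Lakehouse Optimizer or any Databricks API.

```python
from statistics import mean, stdev


def flag_cost_anomalies(daily_costs, window=7, threshold=3.0):
    """Return indices of days whose spend deviates sharply from the trailing window.

    Hypothetical helper for illustration.
    daily_costs: list of daily spend totals (assumed input, e.g. in USD).
    window: number of preceding days used as the baseline (must be >= 2 for stdev).
    threshold: how many standard deviations away from the baseline mean counts as anomalous.
    """
    anomalies = []
    for i in range(window, len(daily_costs)):
        baseline = daily_costs[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change at all as anomalous.
            if daily_costs[i] != mu:
                anomalies.append(i)
            continue
        # z-score of today's spend relative to the trailing baseline.
        if abs(daily_costs[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies
```

For example, `flag_cost_anomalies([100, 102, 98, 101, 99, 103, 100, 250, 101])` flags only index 7, the 250 day. Note that a flagged spike still enters later baselines and widens them; a production version might exclude flagged days from the baseline.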

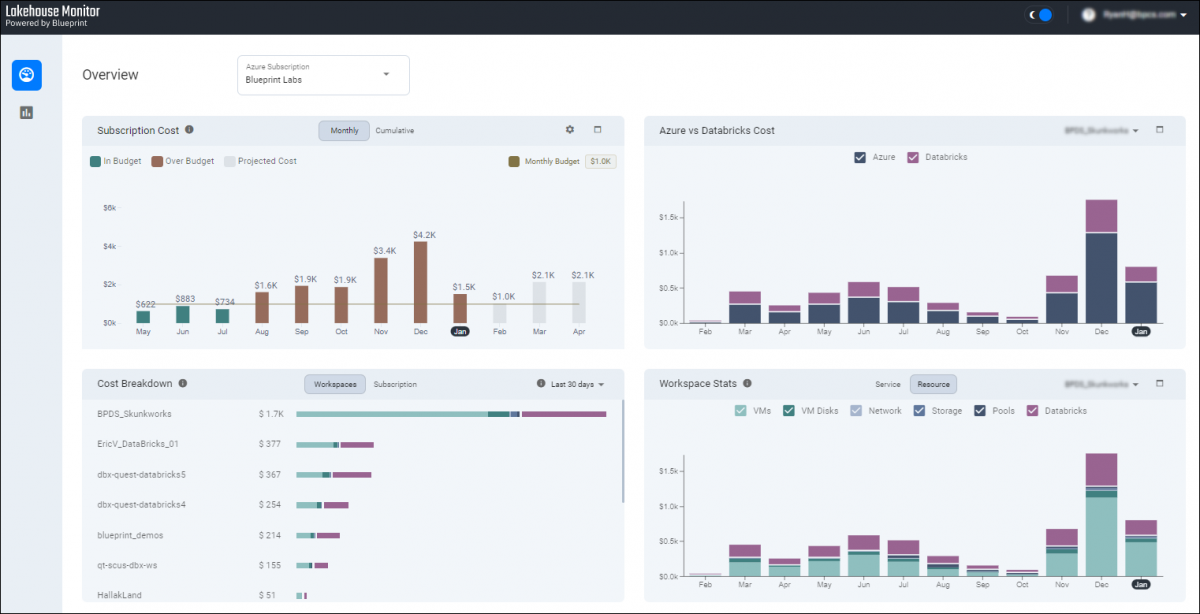

Cloud optimization tools like the Lakehouse Optimizer powered by Blueprint provide the most granular visibility into your cloud infrastructure and Databricks performance and costs.

Why DevOps, FinOps, and Leadership Care About Cloud Costs

Cloud costs directly affect your bottom line, so it’s essential to understand how engineering decisions shape business outcomes. DevOps is responsible for keeping systems running smoothly, including cloud performance and costs, so that customers have a positive experience. With real-time visibility into cloud costs and performance, engineers can identify and address issues before they become problems, mitigating risk and maximizing benefits.

- Cost Savings: The main driver behind cloud cost optimization is getting more value out of existing investments. Improving utilization frees up headroom to grow into additional use cases.

- Visibility: Granular, real-time visibility into cloud costs and performance keeps you aligned with business objectives and accountable for spend, and it can also strengthen your security and privacy posture.

- Predictability: When it comes to cost and performance, no one likes surprises or unexpected fluctuations. Real-time cloud monitoring tools help here, but alerts alone are not enough; trustworthy forecasting is essential.

- Efficiency: Unused resources are a costly waste. Implementing load balancing and automatic scaling can optimize cloud computing resources at scale and ensure that you are getting the most out of your investment.

- Performance: Your website and applications are how customers interact with your products and services, so speed and performance matter. The performance of your underlying cloud, data, and AI platform affects not only your customers but also your internal processes and mission-critical workloads. How well your applications, workloads, and databases run in the cloud can make or break your business. Choosing the right fit for your business avoids excessive spending or subpar results and ensures seamless operations, speed, and the highest customer satisfaction.

- Justification: Allocating actual costs to the functions and applications that are driving them ensures that you know where every dollar is going and that it is adding value.
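The Justification point comes down to grouping spend by whoever drives it. Here is a minimal sketch, assuming each billing line item carries a cost and a dictionary of tags (for example, tags attached to clusters or jobs). The record shape and the `allocate_costs` helper are hypothetical, chosen for illustration only.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key="team"):
    """Sum cost per tag value; spend without the tag is grouped under 'untagged'.

    Hypothetical helper: each item is assumed to look like
    {"cost": float, "tags": {"team": "...", ...}}.
    """
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


items = [
    {"cost": 120.0, "tags": {"team": "data-eng"}},
    {"cost": 80.0, "tags": {"team": "ml"}},
    {"cost": 30.0, "tags": {}},
    {"cost": 20.0, "tags": {"team": "data-eng"}},
]
print(allocate_costs(items))
```

With the sample items, the result is `{'data-eng': 140.0, 'ml': 80.0, 'untagged': 30.0}`. Keeping an explicit "untagged" bucket makes unallocated spend visible rather than silently dropping it.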

6 Quick Wins for Cloud Cost Optimization

The strategies below can be implemented in a matter of weeks, delivering quick wins for cost and performance optimization.

- Start with Lakehouse Optimization and Assessment: Invoice and billing data often lack meaningful detail. Before you make long-term cloud provider commitments, assess and monitor your environment and resources (e.g., cloud infrastructure, databases, servers, storage, applications, websites, games, containers, hypervisors, and code repositories) to determine the best course of action. With the Lakehouse Optimizer powered by Blueprint, you can be up and running with real-time data within hours. Armed with utilization data, you can make smart decisions about spending commitments, enterprise agreements, and reserved instances. Likewise, granular performance data lets engineering prioritize courses of action, which increases efficiency and productivity across the board.

- Create an Optimization Plan: The assessment gives engineering the granular performance data and data intelligence it needs to establish a baseline and identify the biggest opportunities for improvement. From there, you can prioritize actions, create an optimization plan, and set timelines. Common optimization techniques include: 1) finding unused resources; 2) consolidating idle resources; 3) leveraging heat maps; 4) right-sizing computing services; 5) investing in Reserved Instances; 6) monitoring and correcting cost and performance anomalies; and 7) choosing between multi-cloud and single-cloud strategies (more on these in future blogs).

- Auto-scaling: Manual processes take time and energy that your team may not have. That’s where autoscaling, or on-demand scalability, comes in: modern platforms can rapidly scale resources up or down as your business needs change.

- Optimize Software License Usage and Costs: Software licenses can be a large portion of your cloud costs. There may be opportunities to bring your own licenses to save on licensing fees (e.g., Microsoft Windows, SQL Server). Some licenses can be more expensive to run in some clouds due to licensing restrictions and complexities. Evaluate and determine the best options.

- Establish a Center of Excellence for Continuous Optimization: When you get an invoice after the fact, it’s too late to do anything about it. The ability to continuously monitor and analyze your cloud health and costs in real time keeps you agile, resilient, and ready to act. Cost optimization should be an ongoing exercise for all phases of your production and development lifecycles.

  - Planning: Use cost and performance data to inform your budget and roadmap. Identify and prioritize where your team should spend its time, and apply the fixes that deliver the largest cost savings and productivity gains.

  - Deployment: Quickly identify and eliminate inefficiencies in the deployment cycle (e.g., provisioning, application deployment, and load balancing) that drive up cost.

  - Design and Build: To make informed, cost-effective infrastructure decisions, you need to be able to report on unit cost and planned spend. This lets you scale smartly for growth.

  - Monitoring: Every decision has a cost. Continuous monitoring lets you quickly assess spend by team, feature, or product and iterate on optimization.

- Find a Partner with Deep Spark Expertise: Wherever you are in your data journey, it pays to have a system integrator or technology partner with Spark domain expertise, especially one that specializes in data migration, optimization, and data science (AI/ML) use cases. Having a Spark expert in your back pocket keeps you ready to act on business and data intelligence initiatives, large and small, at a moment’s notice. That’s agility and resiliency.
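Finding unused resources and right-sizing, two of the optimization plan techniques above, typically start with a simple screen over utilization data. This is a minimal sketch under stated assumptions: the `ResourceUsage` record, the 20% utilization cutoff, and the 730-hour month (resources assumed to run around the clock) are all hypothetical choices, not Lakehouse Optimizer behavior.

```python
from dataclasses import dataclass


@dataclass
class ResourceUsage:
    # Hypothetical record shape for illustration.
    name: str
    hourly_cost: float      # cost per hour while the resource is running
    avg_utilization: float  # average CPU utilization over the window, 0.0-1.0


def find_underutilized(resources, threshold=0.2, hours_per_month=730):
    """Return (name, estimated monthly spend) for resources below the utilization
    threshold, most expensive first. Assumes resources run 24/7 (about 730 h/month)."""
    flagged = [
        (r.name, round(r.hourly_cost * hours_per_month, 2))
        for r in resources
        if r.avg_utilization < threshold
    ]
    # Sort so the biggest savings opportunities surface first.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


fleet = [
    ResourceUsage("etl-cluster", 4.0, 0.65),
    ResourceUsage("dev-cluster", 2.5, 0.05),
    ResourceUsage("adhoc-cluster", 6.0, 0.12),
]
print(find_underutilized(fleet))
```

With the sample fleet, the screen returns `[('adhoc-cluster', 4380.0), ('dev-cluster', 1825.0)]`: the busy ETL cluster is left alone, and the two mostly idle clusters become candidates for consolidation, auto-termination, or downsizing.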

Right Data, Right People, Right Time

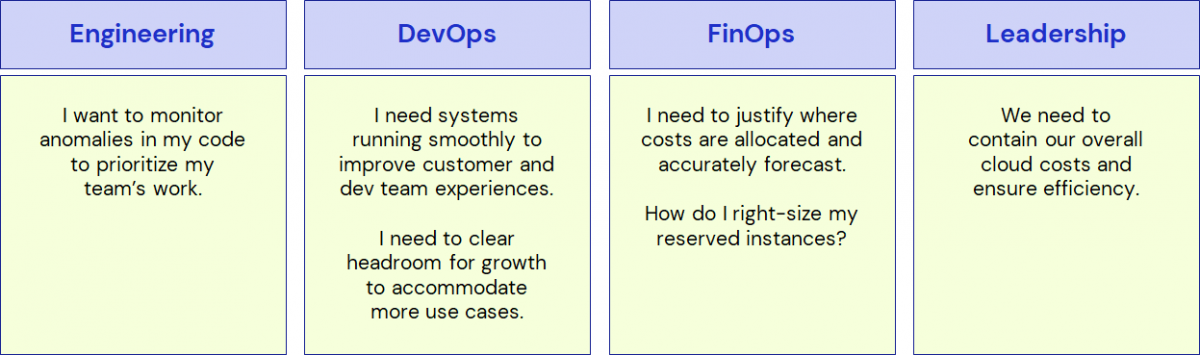

Engineers need to compare and contrast data across the development lifecycle. DevOps needs to slice and dice granular data to fix problems faster. FinOps cares more about data for forecasting, such as how much a bill will go up with each incremental customer. And leadership teams are tracking cloud costs as a KPI. All of these groups look at the same underlying data, but each needs it presented differently because each uses it in different ways.
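The FinOps question of how much a bill rises with each incremental customer can be estimated with an ordinary least-squares fit of monthly cost against customer count. Here is a minimal sketch, assuming you have paired monthly observations; the function name and the linear cost model (a fixed base plus a per-customer slope) are assumptions for illustration.

```python
def marginal_cost_per_customer(customers, monthly_costs):
    """Fit cost = fixed + slope * customers by ordinary least squares.

    Hypothetical helper. Returns (slope, fixed), where slope is the estimated
    added monthly cost per additional customer.
    """
    n = len(customers)
    if n != len(monthly_costs) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(customers) / n
    mean_y = sum(monthly_costs) / n
    sxx = sum((x - mean_x) ** 2 for x in customers)
    if sxx == 0:
        raise ValueError("customer counts must vary to estimate a slope")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(customers, monthly_costs))
    slope = sxy / sxx
    fixed = mean_y - slope * mean_x
    return slope, fixed


slope, fixed = marginal_cost_per_customer([100, 200, 300, 400], [7000, 9000, 11000, 13000])
print(slope, fixed)
```

With this hypothetical data, the fit returns a slope of 20.0 and a fixed base of 5000.0, so 500 customers would forecast to roughly 15,000 per month. Real cloud costs are often stepwise rather than linear, so treat the slope as a rough planning figure.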

The more readily teams can access the right data at the right time, the faster and more effectively they can make changes that improve quality and the bottom line. That speeds time to value.

What’s Next?

Without visibility into cloud costs and performance, it’s impossible to identify anomalies and prioritize work. With cloud costs as a key metric, DevOps and FinOps can make data-driven business decisions rather than purely engineering-driven ones, such as allocating resources in line with revenue and prioritizing efforts based on business outcomes.

The best way to achieve visibility and maximize performance is to invest in a solution that helps developers and engineers understand the cost of their utilization, while providing finance and executives with actionable insights they need to understand where money is being spent, the justification behind it, and how it impacts the bottom line.

What do you want to do today?