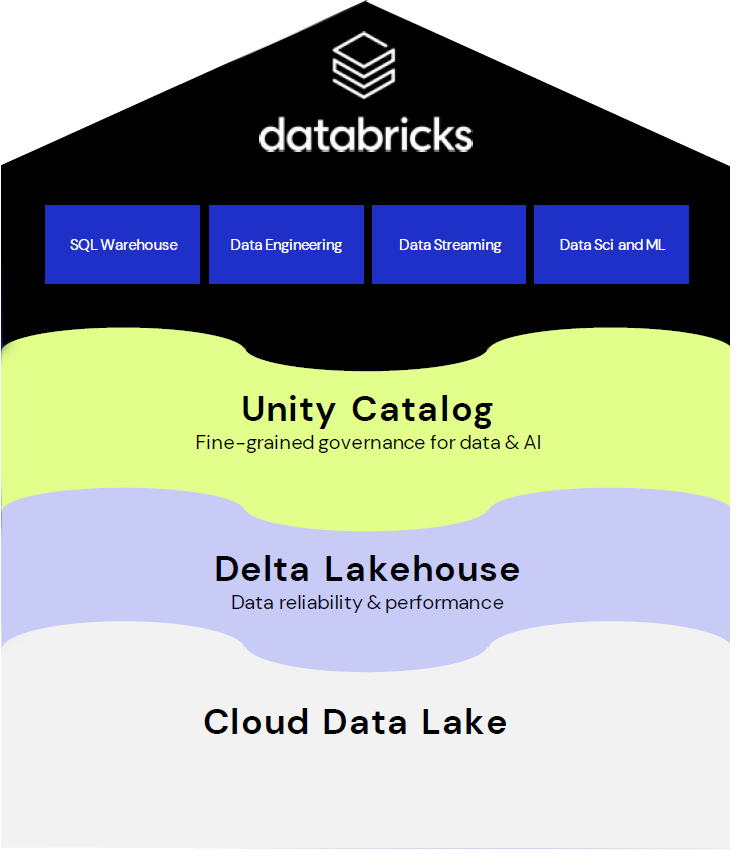

DATABRICKS CENTER OF EXCELLENCE

Greenfield Lakehouse QuickStart

Benefits

- Accelerate high-value analytics initiatives and gain experience with Databricks.

- Get up and running on Databricks in an accelerated timeline.

- Complete two (2) full data pipelines and analyze the results with SQL analytics and BI tools.

QuickStart overview

Introduce

a data team to the Lakehouse

Prepare

Databricks & data services, non-prod env.

Complete

two full data pipelines

Analyze

with SQL Analytics and BI tools

QuickStart timeline

Stage 1

Lakehouse 101

- Data Acquisition

- Simple Data Transformations

- Organizing Data

- Security

- BI, Reporting

- Optimizing Costs & Management

Stage 2-3

Up & running

- Implementation → Powered by Infra-as-Code

- Security Config → Blueprint Security Rapid Config

- Lakehouse Optimization → Blueprint Lakehouse Optimizer

Stage 4-5

Data pipelines

- Identify Data Sources

- Historical & Current Data

- Data Transformations

- Data Quality

- Data Set Creation, Scheduled

- Tables in the Lakehouse, Ready!

Stage 8-10

BI & analysis, optimize, & roadmap

- Power BI or Tableau

- DBSQL for Ad hoc Analysis

- Dashboards & Reports

- Utilization Management

- Roadmap the Future

Deployment journey

Trial activation

- SaaS platform trial enabled

- No inbound cloud firewall blocks

AWS or Azure readiness

- Account with create-rights

- Terraform installed

QuickStart data sources

- Cloud accessible services identified

- Read-only account

Scripted build

- Resource / Admin groups

- Storage, Databricks workspace, clusters

- Unity Catalog / Metastore / Permissions

- Blueprint Lakehouse Optimizer

Sample data & notebooks

- Samples deployed

- 3 notebooks deployed

Workflows active

- Enable scheduled jobs/workflows

Databricks is LIVE!

- SQL analysis workshop

- Power BI / Tableau

- Engineering & workflow demos

Lakehouse optimized

- Monitor jobs

- Understand costs

- Identify orphaned workloads

Deliverables

Build a net-new use case and validate cost vs. performance and usability of the Databricks platform.

- TCO and performance projection report

- Established Databricks Lakehouse environment

- Data ingestion pipeline

- Platform utilization monitoring app

- Working end-to-end use case/business process deployed to a non-production environment

- Results report and demonstration